Author: Teng Yan, Chain of Thought; Translation: Golden Finance xiaozou

I have one big regret that still bothers me. It was undoubtedly the most obvious investment opportunity to anyone paying attention, yet I didn't invest a penny in it. No, I'm not talking about the next Solana killer or a dog meme coin wearing a funny hat.

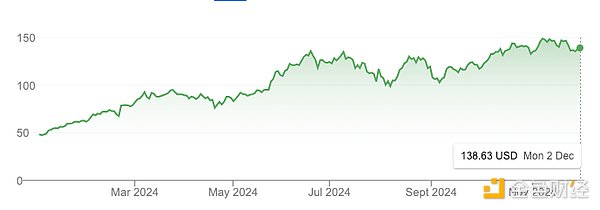

It was... NVIDIA.

In just one year, NVDA's market cap soared from $1 trillion to $3 trillion. It tripled, outperforming even Bitcoin over the same period.

Of course there is AI hype, but much of it is grounded in reality. NVIDIA reported fiscal 2024 revenue of $60 billion, up 126% from fiscal 2023. That is an astonishing performance.

So why did I miss it?

For the past two years, I was focused on crypto and didn't look at the outside world or pay attention to AI. It was a huge mistake, and it still haunts me today.

But I won't make the same mistake again.

Today, Crypto AI feels very similar. We are on the verge of an explosion of innovation. This is too much like the California Gold Rush of the mid-19th century to ignore—industries and cities sprang up overnight, infrastructure developed at breakneck speed, and wealth was created by those who dared to think and act.

Like NVIDIA in its early days, Crypto AI will be an obvious opportunity in hindsight.

In the first part of this article, I will lay out why Crypto AI is the most exciting underdog opportunity for investors and builders today.

A brief overview is as follows:

Many people still think it is a fantasy.

Crypto AI is still in its early stages and may still be 1-2 years away from peak hype.

This field represents a growth opportunity of at least $230 billion.

Essentially, Crypto AI is AI built on crypto infrastructure. This means it is more likely to follow AI's exponential growth trajectory than that of the broader crypto market. To avoid being left behind, you need to keep up with the latest AI research on arXiv. You also need to talk to founders who believe they are building the next amazing products and services.

In the second part of this article, I will take a deep dive into four of the most promising subfields of Crypto AI:

Decentralized Computing: Training, Inference, and GPU Markets

Data Networks

Verifiable AI

On-chain AI agents

This article is the culmination of several weeks of in-depth research and conversations with founders and teams across Crypto AI. It is not an exhaustive dive into every area. Instead, think of it as a high-level roadmap designed to pique your curiosity, sharpen your research, and guide your investment thinking.

1. The Crypto AI landscape

I picture the decentralized AI stack as an ecosystem with several layers. At one end sit decentralized compute and open data networks, which power decentralized AI model training.

Each inference is then verified, both input and output, using a combination of cryptography, cryptoeconomic incentives, and evaluation networks. These verified outputs flow to AI agents that can run autonomously on-chain. They also flow to consumer and enterprise AI applications that users can truly trust.

The coordination network connects everything, enabling seamless communication and collaboration across the entire ecosystem.

In this vision, anyone building AI can tap into one or more layers of the stack depending on their needs. They might use decentralized compute for model training, or evaluation networks to ensure high-quality outputs. Either way, the stack offers a range of options.

Thanks to the inherent composability of blockchains, I believe we will naturally move toward a modular future. Each layer is becoming highly specialized, with protocols optimized for distinct functions rather than an all-in-one integrated approach.

There are a large number of startups at every layer of the decentralized AI stack, most of which have been founded in the past 1-3 years. It is clear that the field is still in its early stages.

The most comprehensive and up-to-date Crypto AI startup map I have seen is maintained by Casey and her team at topology.vc. It is an invaluable resource for anyone tracking the space.

As I dig deeper into the Crypto AI subfields, I keep asking myself: how big is the opportunity? I'm not interested in small plays. I'm looking for markets that can reach hundreds of billions of dollars.

(1) Market size

Let's look at market size first. When evaluating a niche, I ask myself: Is it creating an entirely new market or disrupting an existing market?

Take decentralized computing as an example. It is a disruptive category, and its potential can be gauged by looking at the existing cloud computing market. That market is currently valued at roughly $680 billion and is expected to reach $2.5 trillion.

Entirely new markets, such as AI agents, are harder to quantify. Without historical data, sizing them requires educated guesswork and a hard look at the problems they solve. Note that sometimes what looks like a new market is really just a solution looking for a problem.

(2) Timing

Timing is everything. Technology tends to get better and cheaper over time, but the pace varies.

How mature is the technology in a given segment? Is it ready for mass adoption, or is it still in the research phase, with practical applications years away? Timing determines whether a sector deserves immediate attention or belongs in the "wait and see" pile.

Take Fully Homomorphic Encryption (FHE) as an example. Its potential is undeniable, but today it is still too slow for widespread use, and it may be several years before it reaches mainstream adoption. By focusing first on areas closer to scaling, I can spend my time and energy where momentum and opportunity are building.

If I were to map these categories on a chart of market size versus timing, it would look something like this. Remember, this is a conceptual map, not a hard guide. There is plenty of nuance. Within verifiable inference, for example, different approaches (such as zkML and opML) are at different levels of readiness.

That said, I believe that the scale of artificial intelligence will be so large that even a field that seems "niche" today may evolve into an important market.

It's also worth noting that technological progress doesn't always move in a straight line. It often comes in bursts, and when those bursts happen, my view of timing and market size will change.

With this framework, let us look at each sub-field in detail.

2. Field 1: Decentralized Computing

Decentralized computing is the backbone of decentralized AI. GPU markets, decentralized training, and decentralized inference are closely linked.

The supply side usually comes from small and medium-sized data centers and consumer GPUs.

The demand side is small but still growing. Today, it comes from price-sensitive, latency-insensitive users and smaller AI startups.

The biggest challenge facing Web3 GPU markets today is simply getting them to work properly.

Coordinating GPUs on a decentralized network requires advanced engineering techniques and a well-designed, reliable network architecture.

2.1 GPU Market/Computing Network

Several Crypto AI teams are building decentralized networks that tap the world's latent computing power. They are responding to a GPU shortage in which supply cannot keep up with demand.

The core value proposition of the GPU market has three aspects:

Lower cost: you can access compute at prices "up to 90% lower" than AWS, because there are no middlemen and supply is open. Essentially, these marketplaces let you tap the lowest marginal cost of compute in the world.

Greater flexibility: no locked contracts, no KYC process, no waiting time.

Censorship resistance

On the supply side, these marketplaces source compute from:

Enterprise-grade GPUs (such as A100s and H100s). These come from small and medium-sized data centers that struggle to find demand on their own, or from Bitcoin miners looking to diversify. I also know of teams tapping large funded infrastructure projects, where data centers have been built as part of technology growth plans. These GPU providers are often incentivized to keep their GPUs on the network, which helps them offset the amortized cost of the hardware.

Consumer-grade GPUs from millions of gamers and home users who connect their computers to the network in exchange for token rewards.

On the other hand, today's demand for decentralized computing comes from:

Price-sensitive, latency-insensitive users. This segment prioritizes price over speed. Think of researchers exploring new fields, independent AI developers, and other cost-conscious users who don't need real-time processing. Because of budget constraints, many of them are poorly served by traditional hyperscalers such as AWS or Azure. Because they are scattered widely across the population, targeted marketing is crucial to reaching them.

Small AI startups that need flexible, scalable compute without signing long-term contracts with the major cloud providers. Business development is critical to winning this segment, since these startups actively look for alternatives to hyperscaler lock-in.

Crypto AI startups building decentralized AI products that lack their own compute supply and need to tap one of these networks.

Cloud gaming: Although not directly driven by AI, cloud gaming is increasingly demanding GPU resources.

The key thing to remember is that developers always prioritize cost and reliability.

The real challenge is demand, not supply.

Startups in this space often point to the size of their GPU supply network as a sign of success. But this is misleading: at best, it is a vanity metric.

The real constraint is not supply, but demand. The key metric to track is not the number of GPUs available, but utilization and the number of GPUs actually rented.
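To make the distinction concrete, here is a minimal Python sketch that computes utilization from two numbers a marketplace could report. The first is the count of listed GPUs, and the second is the GPU-hours actually rented in a given window. The `NetworkSnapshot` class and every figure below are hypothetical and are chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class NetworkSnapshot:
    """Hypothetical summary of a GPU marketplace over one measurement window."""
    name: str
    listed_gpus: int          # GPUs advertised on the network (the "vanity" number)
    rented_gpu_hours: float   # GPU-hours actually paid for during the window
    window_hours: float       # length of the window, e.g. 720 for 30 days

    def utilization(self) -> float:
        """Fraction of available GPU-hours that were actually rented."""
        available = self.listed_gpus * self.window_hours
        return self.rented_gpu_hours / available if available else 0.0

    def avg_gpus_rented(self) -> float:
        """Average number of GPUs under rental at any moment in the window."""
        return self.rented_gpu_hours / self.window_hours


# Illustrative numbers only: a large but idle network vs. a small, busy one.
big = NetworkSnapshot("big-supply", listed_gpus=20_000, rented_gpu_hours=48_000, window_hours=720)
busy = NetworkSnapshot("small-busy", listed_gpus=2_000, rented_gpu_hours=864_000, window_hours=720)

for net in (big, busy):
    print(f"{net.name}: {net.listed_gpus} listed, "
          f"{net.avg_gpus_rented():.0f} rented on average, "
          f"utilization {net.utilization():.1%}")
```

With these made-up inputs, the network with ten times more listed GPUs rents far fewer of them. That gap is exactly what a supply headcount hides.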

Tokens do an excellent job of bootstrapping supply, creating the incentives needed to scale quickly. However, they do not inherently solve the demand problem. The real test is getting the product good enough to unlock latent demand.

Haseeb Qureshi (Dragonfly) said it well:

Making computing networks actually work

Contrary to popular belief, the biggest hurdle facing web3 distributed GPU markets today is getting them to work properly.

This is not a trivial problem.

Coordinating GPUs in a distributed network is extremely complex and involves many challenges. These include resource allocation, dynamic workload scaling, load balancing across nodes and GPUs, latency management, data transfer, fault tolerance, and handling diverse hardware spread across geographies. I could go on.

Achieving this requires thoughtful engineering and a reliable, well-designed network architecture.

To put this in perspective, think of Google's Kubernetes. It is widely considered the gold standard for container orchestration, automating load balancing and scaling in distributed environments. Those challenges are very similar to the ones distributed GPU networks face. Kubernetes itself was built on more than a decade of Google's experience, and even then it took years of relentless iteration to perform well.

Some of the GPU computing markets currently online can handle small-scale workloads, but once they try to scale, problems arise. I suspect this is because their architectural foundations are poorly designed.

Another challenge, and opportunity, for decentralized compute networks is ensuring trustworthiness: verifying that each node actually provides the computing power it claims. Today this relies on network reputation, and in some cases compute providers are ranked by reputation score. Blockchains seem well suited to trustless verification. Startups like Gensyn and Spheron are pursuing trustless approaches to this problem.

Many web3 teams are still grappling with these challenges today, which means the door of opportunity is wide open.

Decentralized computing market size

How big is the decentralized computing network market?

Today, it is probably only a small slice of the $680 billion to $2.5 trillion cloud computing industry. However, despite the added friction for users, there will be demand as long as costs stay below those of traditional providers.

I believe costs will stay low in the short to medium term. One reason is token subsidies. Another is the supply unlocked from price-insensitive providers: if I can rent out my gaming laptop for extra cash, I'm happy whether it earns $20 or $50 a month.

But the real growth potential of decentralized compute networks, and the real expansion of their TAM, will come when:

Decentralized training of artificial intelligence models becomes practical.

Inference demand surges beyond what existing data centers can supply. This is already starting to happen: Jensen Huang has said that inference demand will increase "a billion times."

Proper service level agreements (SLAs) become available, removing a key barrier to enterprise adoption. Today, users of decentralized compute networks experience varying levels of service quality (such as uptime percentage). With SLAs, these networks could offer standardized reliability and performance metrics, making decentralized compute a viable alternative to traditional cloud providers.
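An uptime percentage only becomes meaningful once it is translated into allowed downtime. The short Python sketch below does that arithmetic for a 30-day month and checks a node's observed downtime against a target. The uptime targets and the observed figure are illustrative assumptions, not terms offered by any specific network.

```python
MINUTES_PER_DAY = 24 * 60


def allowed_downtime_minutes(uptime_pct: float, days: int = 30) -> float:
    """Maximum downtime permitted by an uptime target over `days` days."""
    return days * MINUTES_PER_DAY * (1 - uptime_pct / 100)


def meets_sla(observed_downtime_min: float, uptime_pct: float, days: int = 30) -> bool:
    """True if the observed downtime stays within the SLA's downtime budget."""
    return observed_downtime_min <= allowed_downtime_minutes(uptime_pct, days)


for target in (99.0, 99.9, 99.99):
    print(f"{target}% uptime -> {allowed_downtime_minutes(target):.1f} min/month of downtime")

# Hypothetical node that was offline for 90 minutes this month.
print("meets 99.0%?", meets_sla(90, 99.0))
print("meets 99.9%?", meets_sla(90, 99.9))
```

Each extra "9" cuts the downtime budget by a factor of ten, which is why enterprises want it spelled out in a contract rather than left to best effort.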

Decentralized, permissionless compute is the base layer, the infrastructure, of the decentralized AI ecosystem.

Although the GPU supply chain is expanding, I believe we are still at the dawn of the age of intelligence. Demand for compute will be insatiable.

Watch for the inflection point that could trigger a re-rating of every working GPU marketplace. It may come soon.

Other notes:

The pure-play GPU marketplace space is crowded. Decentralized platforms compete fiercely with one another and with emerging web2 AI cloud services such as Vast.ai and Lambda.

Small nodes (such as 4x H100) aren't in much demand because their uses are limited. Large clusters are still in demand, but good luck finding anyone selling them.

Will a dominant player aggregate all compute supply for decentralized protocols, or will compute stay fragmented across multiple marketplaces? I lean toward the former, since consolidation generally improves infrastructure efficiency. But it will take time, and in the meantime fragmentation and confusion will continue.

Developers want to focus on building applications, not on handling deployment and configuration. Marketplaces must abstract away these complexities and make access to compute as frictionless as possible.

2.2 Decentralized training

If scaling laws continue to hold, training the next generation of frontier AI models in a single data center will one day become impossible.

Training AI models requires moving large amounts of data between GPUs. Slow data transfer (interconnect) between distributed GPUs is often the biggest obstacle.

Researchers are exploring multiple approaches at once and are making breakthroughs (such as OpenDiLoCo and DisTrO). These advances will compound and accelerate progress in the field.

The future of decentralized training may lie in small, specialized models for niche applications rather than frontier, AGI-focused models.

With the shift to models such as OpenAI o1, the demand for inference will soar, creating opportunities for decentralized inference networks.

Imagine this: a massive, world-changing AI model, developed not in secretive elite labs but shaped by millions of ordinary people. Gamers whose GPUs usually render the cinematic explosions of Call of Duty now lend their hardware to something far grander. That something is an open-source, collectively owned AI model with no central gatekeeper.

It is a future where foundation-scale models are not confined to the top AI labs.

But let’s ground this vision in today’s reality. At present, most of the heavyweight artificial intelligence training is still concentrated in centralized data centers, and this may become the norm for some time.

Companies like OpenAI are expanding their massive clusters. Elon Musk recently announced that xAI is about to build a data center with the equivalent of 200,000 H100 GPUs.

But it's not just about raw GPU counts. Model FLOPS Utilization (MFU) tracks how much of a GPU's maximum capacity is actually used. Google introduced the metric in its 2022 PaLM paper. Surprisingly, MFU typically hovers around 35-40%.

Why so low? In line with Moore's Law, GPU performance has soared over the past few years. Improvements in networking, memory, and storage have lagged well behind, however, creating bottlenecks. As a result, GPUs are often throttled, sitting idle while they wait for data.
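MFU itself is simple arithmetic: the FLOPs a training run actually achieves, divided by the hardware's theoretical peak. The Python sketch below uses the common approximation of about 6 FLOPs per parameter per training token (forward plus backward pass), which leaves out attention FLOPs. The model size, GPU count, per-GPU peak, and throughput are round hypothetical numbers, not measurements.

```python
def model_flops_utilization(tokens_per_second: float, n_params: float,
                            n_gpus: int, peak_flops_per_gpu: float) -> float:
    """Approximate MFU for dense transformer training.

    Assumes ~6 * n_params FLOPs per token for forward + backward,
    a standard simplification that leaves out attention FLOPs.
    """
    achieved_flops = 6 * n_params * tokens_per_second
    peak_flops = n_gpus * peak_flops_per_gpu
    return achieved_flops / peak_flops


# Hypothetical run: 70B-parameter model, 1,000 GPUs with a 1 PFLOP/s peak each,
# processing 900,000 tokens per second across the whole cluster.
mfu = model_flops_utilization(tokens_per_second=900_000, n_params=70e9,
                              n_gpus=1_000, peak_flops_per_gpu=1e15)
print(f"MFU: {mfu:.1%}")
```

These inputs give 37.8%, inside the 35-40% range mentioned above. Much of the unused capacity corresponds to the kind of waiting on networking, memory, and storage described in this section.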

AI training today remains highly centralized because of one word: efficiency.

Training large models depends on the following techniques:

Data parallelism: splitting the training data across multiple GPUs, which process different subsets in parallel to speed up training.

Model parallelism: distributing parts of the model across multiple GPUs to get around memory constraints.

These methods require GPUs to exchange data constantly. That makes interconnect speed, the rate at which data moves between machines in the network, critical.
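Both techniques can be illustrated in a few lines. The Python sketch below is a toy, not a training framework. Data parallelism is modeled as each "GPU" computing a gradient for a one-parameter linear model on its own shard of data, followed by an averaging step that stands in for the all-reduce. Model parallelism is modeled as assigning contiguous blocks of layers to devices. The function names and data are hypothetical.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the one-parameter model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_grad(w, shards):
    """Each worker computes a gradient on its shard; averaging stands in for all-reduce.

    With equally sized shards, the average equals the full-batch gradient,
    but it requires an exchange between workers on every single step.
    """
    local_grads = [grad_mse(w, xs, ys) for xs, ys in shards]
    return sum(local_grads) / len(local_grads)


def split_layers(layers, n_devices):
    """Model (pipeline) parallelism: contiguous blocks of layers, at most one per device."""
    per_device = -(-len(layers) // n_devices)  # ceiling division
    return [layers[i:i + per_device] for i in range(0, len(layers), per_device)]


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]  # true weight is 2
shards = [(xs[:2], ys[:2]), (xs[2:], ys[2:])]          # two "GPUs"

w = 0.0
for _ in range(50):
    w -= 0.01 * data_parallel_grad(w, shards)          # one synchronization per step

print(f"learned w = {w:.3f}")
print(split_layers([f"layer{i}" for i in range(10)], n_devices=4))
```

The averaging line is where real systems move gigabytes of gradients over the interconnect. Model parallelism adds its own traffic, because activations must pass from one device's block of layers to the next.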

When the cost of training cutting-edge artificial intelligence models exceeds $1 billion, every efficiency improvement matters.

With high-speed interconnects, centralized data centers can move data between GPUs quickly, saving significant training time and cost. Decentralized setups can't match this.

Overcoming slow interconnect speeds

If you talk to people working in AI, many will tell you that decentralized training simply doesn't work.

In a decentralized setting, GPU clusters are not physically co-located, so moving data between them is much slower and becomes a bottleneck. Training requires GPUs to synchronize and exchange data at every step. The farther apart they are, the higher the latency, and higher latency means slower training and higher cost.

A training run that takes a few days in a centralized data center could stretch to two weeks in a decentralized setup, at a higher cost. That simply isn't viable.
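A back-of-the-envelope calculation shows why bandwidth dominates. In a standard ring all-reduce, each of n workers sends and receives about 2(n-1)/n times the size of the gradients per synchronization. The Python sketch below applies that formula to a hypothetical 1B-parameter model with 2-byte (bf16) gradients on 8 workers. It compares an assumed 400 Gb/s data-center link with an assumed 1 Gb/s internet link. It ignores latency and any overlap with computation, so real numbers will differ.

```python
def ring_allreduce_seconds(n_params: float, bytes_per_param: int,
                           n_workers: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce of the full gradient.

    Each worker transfers roughly 2 * (n - 1) / n * gradient_size.
    Ignores per-message latency and overlap with computation.
    """
    grad_bits = n_params * bytes_per_param * 8
    traffic_bits = 2 * (n_workers - 1) / n_workers * grad_bits
    return traffic_bits / (link_gbps * 1e9)


params = 1e9  # hypothetical 1B-parameter model with bf16 gradients
for label, gbps in [("data-center link, 400 Gb/s", 400), ("internet link, 1 Gb/s", 1)]:
    t = ring_allreduce_seconds(params, bytes_per_param=2, n_workers=8, link_gbps=gbps)
    print(f"{label}: ~{t:.2f} s per synchronization")
```

Under these assumptions, a synchronization takes about 0.07 seconds on the fast link but about 28 seconds on the slow one. When that happens on every training step, the slow link dominates the run.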

But that's about to change.

The good news is that there is a surge of research interest in distributed training. Researchers are exploring multiple approaches simultaneously, as evidenced by the large number of studies and published papers. These advances will stack up and merge to accelerate progress in the field.

It's also about testing in production to see how far we can push the boundaries.

Some decentralized training techniques can already handle smaller models in environments with slow interconnects. Now, cutting-edge research is pushing these methods toward large models.

For example, Prime Intellect's open-source OpenDiLoCo paper demonstrates a practical approach. GPU "islands" perform 500 local steps before synchronizing, which cuts bandwidth requirements by a factor of 500. The work began as Google DeepMind research on small models. It has since scaled to training a 10-billion-parameter model, completed in November, and is now fully open source.
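The core idea can be sketched in a few lines of Python. Each island takes H local optimizer steps without talking to anyone. Then all islands average their parameter changes once. Over a run, the number of synchronizations, and with it the communication, drops by a factor of H. This toy is a deliberate simplification and is closer to plain local SGD than to the full DiLoCo recipe, which uses AdamW inside the islands and Nesterov momentum for the outer step. The per-island losses and all numbers are hypothetical.

```python
# Toy sketch of DiLoCo-style training with GPU "islands" (hypothetical setup).
# Simplifications: each island minimizes its own 1-D loss (w - target)^2,
# the inner optimizer is plain SGD (DiLoCo uses AdamW), and the outer update
# applies the averaged parameter change with a fixed learning rate
# (DiLoCo uses Nesterov momentum for this outer step).


def local_steps(w: float, target: float, steps: int, lr: float) -> float:
    """Run `steps` SGD updates on (w - target)^2 with no communication."""
    for _ in range(steps):
        w -= lr * 2.0 * (w - target)
    return w


def train(targets, outer_rounds: int, inner_steps: int,
          inner_lr: float = 0.01, outer_lr: float = 1.0):
    w_global, syncs = 0.0, 0
    for _ in range(outer_rounds):
        # Every island starts from the shared weights and trains independently.
        deltas = [local_steps(w_global, t, inner_steps, inner_lr) - w_global
                  for t in targets]
        # The only communication: average the parameter changes ("pseudo-gradients").
        w_global += outer_lr * sum(deltas) / len(deltas)
        syncs += 1
    return w_global, syncs


targets = [1.0, 2.0, 3.0, 6.0]   # hypothetical per-island data
total_steps, H = 5_000, 500      # H = local steps between synchronizations
w_final, n_syncs = train(targets, outer_rounds=total_steps // H, inner_steps=H)
print(f"final weight: {w_final:.4f} (optimum of the averaged loss is 3.0)")
print(f"synchronizations: {n_syncs} instead of {total_steps} "
      f"-> {total_steps // n_syncs}x less communication")
```

In this toy, the islands still agree on the optimum of the combined loss while synchronizing only 10 times instead of 5,000. Real models are far less forgiving, which is why the research effort matters.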

Nous Research is raising the bar with its DisTrO framework. While training a 1.2B-parameter model, DisTrO uses optimizers to cut inter-GPU communication requirements by a staggering 10,000x.

And the momentum keeps building. In December, Nous announced the pre-training of a 15B-parameter model. Its loss curve (how model error decreases over time) and convergence rate (how quickly model performance stabilizes) matched, and even beat, the results typically seen in centralized training. Yes, better than centralized.

SWARM Parallelism and DTFMHE are other approaches for training large AI models across heterogeneous devices, even when those devices vary in speed and connectivity.

Managing diverse GPU hardware is another challenge, including the memory-constrained consumer GPUs typical of decentralized networks. Techniques like model parallelism (splitting model layers across devices) can help.

The future of decentralized training

Models trained with decentralized methods are still far smaller than frontier models. GPT-4 reportedly has close to a trillion parameters, 100 times more than Prime Intellect's 10B model. To reach true scale, we need breakthroughs in model architecture, better network infrastructure, and smarter distribution of tasks across devices.

We can dream big. Imagine a world where decentralized training aggregates more GPU computing power than even the largest centralized data centers can muster.

Pluralis Research (an elite team specializing in decentralized training that is worth keeping an eye on) believes this is not only possible, but inevitable. While centralized data centers are limited by physical constraints such as space and power availability, decentralized networks can tap into a truly unlimited pool of global resources.

Even NVIDIA’s Jensen Huang admits that asynchronous decentralized training can unlock the true potential of artificial intelligence scaling. Distributed training networks are also more fault-tolerant.

So there is a plausible future in which the world's most powerful AI models are trained in a decentralized way.

This is an exciting prospect, but I'm not entirely convinced yet. We need stronger evidence that decentralized training of the largest models is technically and economically feasible.

Here is where I do see great promise. The sweet spot for decentralized training may be small, specialized open-source models built for targeted use cases, rather than competing with ultra-large, AGI-driven frontier models. Certain architectures, especially non-transformer models, have proven well suited to decentralized settings.

There is another piece to the puzzle: tokens. Once decentralized training becomes feasible at scale, tokens could play a key role in incentivizing and rewarding contributors. In doing so, they would effectively bootstrap these networks.

The road to realizing this vision is still long, but progress is encouraging. Future models will scale beyond the capacity of any single data center. As that happens, advances in decentralized training will benefit everyone, including big tech companies and top AI research labs.

The future is distributed. When a technology has such broad potential, history shows it always works better and faster than anyone expected.

2.3 Decentralized inference

Today, most AI compute goes into training large models. Top AI labs are locked in a race to build the best foundation models and, ultimately, to reach AGI.

But here is my view: over the next few years, this training-focused compute will shift toward inference. AI is becoming woven into the applications we use every day, from healthcare to entertainment. As that happens, the compute required to support inference will be staggering.

This is not just speculation. Inference-time compute scaling is the latest buzzword in AI. OpenAI recently released preview and mini versions of its latest model, o1 (codename: Strawberry). The big shift? It takes time to think. It first asks itself what steps it should take to answer a question, and then works through them step by step.

This model is designed for more complex tasks that require a lot of planning, such as crossword puzzles, and problems that require deeper reasoning. You'll notice that it's slower, taking more time to generate responses, but the results are more thoughtful and nuanced. It is also much more expensive to run (25 times more expensive than GPT-4).

The shift in focus is clear. The next leap in AI performance will come not only from training larger models but also from scaling up the compute used during inference.

If you want to dig deeper, several research papers show that:

Scaling inference compute through repeated sampling leads to large improvements across a variety of tasks.

Inference follows exponential scaling laws of its own.
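The intuition behind repeated sampling fits in a few lines. Suppose a single sample solves a task with probability p, and a verifier can reliably recognize a correct answer. Then drawing n samples succeeds with probability 1 - (1 - p)^n, at roughly n times the inference compute. The Python sketch below checks that formula against a simulated model. The perfect verifier and the value of p are simplifying assumptions; real verifiers are imperfect.

```python
import random


def coverage(n: int, p: float) -> float:
    """Probability that at least one of n independent samples is correct."""
    return 1 - (1 - p) ** n


def simulate_best_of_n(n: int, p: float, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate: each 'sample' is a hypothetical model call that is
    correct with probability p; a perfect verifier accepts any correct one."""
    rng = random.Random(seed)
    solved = sum(any(rng.random() < p for _ in range(n)) for _ in range(trials))
    return solved / trials


p = 0.2  # hypothetical single-sample success rate
for n in (1, 5, 10, 50):
    print(f"n={n:>2}: formula {coverage(n, p):.3f}, "
          f"simulated {simulate_best_of_n(n, p, trials=10_000):.3f}, "
          f"compute ~{n}x")
```

The trade-off is explicit: success climbs steeply with n, but so does compute. That is why this style of inference shifts demand toward whoever can supply cheap GPU time.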

Once powerful models are trained, their inference tasks can be offloaded to decentralized compute networks. Inference is where the models actually do their work. This makes sense because:

Inference requires far fewer resources than training. After training, a model can be compressed and optimized using techniques such as quantization, pruning, or distillation. Models can even be split up to run on everyday consumer devices. You don't need a high-end GPU to serve inference.

This is already happening. Exo Labs has figured out how to run the 405B-parameter Llama 3 model on consumer-grade hardware like MacBooks and Mac Minis. Distributing inference across multiple devices lets them handle large workloads efficiently and cost-effectively.

Better user experience. Running computation closer to the user reduces latency, which is critical for real-time applications such as gaming, AR or self-driving cars. Every millisecond counts.
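Quantization is the easiest of these compression techniques to illustrate. The Python sketch below applies simple symmetric per-tensor int8 quantization, with one scale factor for the whole tensor. Each weight is stored in 1 byte instead of 2 (fp16) or 4 (fp32), at the cost of a small rounding error. Production schemes are more sophisticated, with per-channel scales, calibration, and 4-bit formats. The weights and model size here are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: w ~= q * scale, with q in [-127, 127]."""
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all zeros
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.003, 1.0, -0.42]  # hypothetical fp32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print(f"scale={scale:.4f}, max error={max_err:.4f}")

# Weight memory for a hypothetical 8B-parameter model in each format.
n_params = 8e9
for fmt, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    print(f"{fmt}: {n_params * nbytes / 1e9:.0f} GB")
```

Halving or quartering the memory footprint is often the difference between a model that needs a data-center GPU and one that fits on consumer hardware.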

Think of decentralized inference as a CDN (content delivery network) for AI. A CDN serves websites quickly by connecting users to nearby servers. Decentralized inference instead taps local compute to deliver AI responses in record time. With decentralized inference, AI applications become more efficient, responsive, and reliable.
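The CDN analogy maps onto a simple routing rule: send each request to the closest node that is healthy and already holds the model. The Python sketch below implements that rule. The node names, latencies, and `Node` fields are hypothetical. A real network would also weigh price, load, and reputation.

```python
from dataclasses import dataclass


@dataclass
class Node:
    node_id: str
    rtt_ms: float          # measured round-trip time from the user
    has_model: bool        # whether the node already hosts the requested model
    healthy: bool = True   # result of the latest health check


def pick_node(nodes):
    """Route to the lowest-latency node that is healthy and hosts the model."""
    candidates = [n for n in nodes if n.healthy and n.has_model]
    if not candidates:
        raise RuntimeError("no node can serve this request")
    return min(candidates, key=lambda n: n.rtt_ms)


nodes = [
    Node("singapore-1", rtt_ms=12, has_model=True, healthy=False),  # close, but down
    Node("singapore-2", rtt_ms=8, has_model=False),                 # closest, no model
    Node("tokyo-1", rtt_ms=35, has_model=True),
    Node("frankfurt-1", rtt_ms=180, has_model=True),
]
print("serving from:", pick_node(nodes).node_id)
```

Here the two nearest nodes cannot serve the request, so it falls back to the next-closest candidate. That fallback is the latency and fault-tolerance benefit of having many geographically spread nodes.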

The trend is clear. Apple's new M4 Pro chip rivals Nvidia's RTX 3070 Ti, a GPU that until recently was the domain of hardcore gamers. Our hardware is increasingly capable of handling advanced AI workloads.

Crypto's added value

For decentralized inference networks to succeed, they need compelling economic incentives. Nodes must be compensated for the compute they contribute, and the system must distribute rewards fairly and efficiently. Geographic diversity is also essential, both to reduce latency for inference tasks and to improve fault tolerance.

What is the best way to build a decentralized network? Crypto.

Tokens provide a powerful mechanism for aligning participants' interests, ensuring everyone works toward the same goal: growing the network and increasing the token's value.

Tokens also accelerate the growth of the network. They help solve the classic chicken-and-egg problem that has hindered the growth of most networks by rewarding early adopters and driving engagement from day one.

Bitcoin and Ethereum prove the point: they have assembled the largest pools of computing power on the planet.

Decentralized inference networks will be next. Thanks to geographic diversity, they reduce latency, increase fault tolerance, and bring AI closer to users. With crypto incentives, they will scale faster and better than traditional networks.

(To be continued.)