By Lanxi

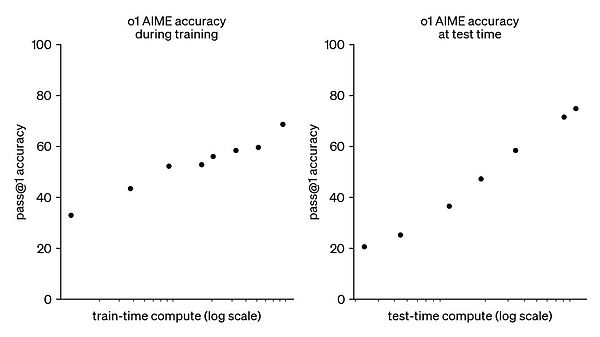

The ten-plus days since DeepSeek went viral have actually been the noisiest stretch. To be honest, most of what got published smells like overtime work rushed out to hit KPIs. Everyone and their dog has been arguing, and only a handful of pieces are worth saving. There are two podcasts, however, that I learned a great deal from, and I recommend both. One is Zhang Xiaojun's episode, in which she invited Pan Jiayi, a PhD researcher at the University of California's AI lab, to explain the DeepSeek paper sentence by sentence. It is nearly 3 hours of high-density output that kills plenty of brain cells, but the endorphins released afterwards are just as explosive. The other is Ben Thompson's collection of 3 podcast episodes about DeepSeek, a little over an hour in total. He is a pioneer of the newsletter format and one of the most technically literate analysts in the world. He has lived in Taipei for many years, and that proximity to Asia gives him far deeper insight than his American counterparts.

Let's start with Zhang Xiaojun's episode. After reading DeepSeek's paper, the guest Pan Jiayi built the fastest small-scale reproduction of the R1-Zero model, a project that has reached nearly 10,000 stars on GitHub. This kind of knowledge relay is really idealism projected onto the field of technology. Flood Sung, a researcher at Moonshot AI, has said that the inspiration for Kimi's reasoning model k1.5 originally came from two videos released by OpenAI. Earlier still, when Google published "Attention Is All You Need", OpenAI immediately recognized the future of the Transformer. The free flow of ideas is the prerequisite for all progress. That is why everyone was so disappointed by the blockade-minded statement from Anthropic founder Dario Amodei, in the spirit of "science has no borders, but scientists have a motherland." In rejecting competition, he was also challenging basic common sense.
Back to the podcast itself. I will try to pull out the key points, though if you have time I recommend listening to the original:

- OpenAI o1 made a stunning debut while doing a very thorough job of hiding how it works, because OpenAI did not want other vendors to crack the principle. In effect it posed a riddle to the industry, betting that nobody would solve it quickly. DeepSeek-R1 was the first to find the answer, and the way it found the answer was quite elegant.
- Open source provides more certainty than closed source, which helps a great deal with developing talent and getting output from it. R1 effectively laid the whole technical route out in the open, so its contribution to stimulating research investment exceeds that of o1, which kept its tricks hidden.
- Although the AI industry keeps burning more and more money, we have in fact gone nearly two years without a next-generation model, and mainstream models are still benchmarking themselves against GPT-4. That is rare in a market that prides itself on changing by the day. Even setting aside whether Scaling Laws have hit a wall, OpenAI o1 is itself an attempt at a new technical line: using a language model to let AI learn to think.
- o1 restored the linear improvement of intelligence on benchmarks, which is impressive. The technical report it released did not disclose many details, but it did mention the key point, namely the value of reinforcement learning. Pre-training and supervised fine-tuning amount to handing the model correct answers to imitate, and over time the model learns to copy the pattern. Reinforcement learning instead lets the model complete the task itself. You only tell it whether the result is right or wrong: if it is right, do more of that, and if it is wrong, do less.
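
The "if it is right, do more of that, and if it is wrong, do less" idea can be sketched as a toy policy-gradient loop. This is only a minimal illustration under stated assumptions, not how o1 or R1 was trained: the "model" is just a softmax over a few candidate answers, the update is a plain REINFORCE step, and every name here (`train_with_outcome_feedback`, `is_correct`, the learning rate) is hypothetical.

```python
import math
import random


def softmax(logits):
    """Turn raw preference scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_with_outcome_feedback(candidates, is_correct, steps=500, lr=0.5, seed=0):
    """Toy REINFORCE loop: the learner never sees the right answer,
    only a right/wrong signal for the answer it sampled itself."""
    rng = random.Random(seed)
    logits = [0.0] * len(candidates)  # start with no preference
    for _ in range(steps):
        probs = softmax(logits)
        i = rng.choices(range(len(candidates)), weights=probs)[0]
        # Outcome-only feedback (assumed scheme): +1 if right, -1 if wrong.
        reward = 1.0 if is_correct(candidates[i]) else -1.0
        # Gradient of log pi(i) w.r.t. the logits is onehot(i) - probs.
        for j in range(len(logits)):
            grad = (1.0 if j == i else 0.0) - probs[j]
            logits[j] += lr * reward * grad
    return softmax(logits)


# Hypothetical task: "what is 1+1?" with three candidate answers.
probs = train_with_outcome_feedback(["1", "2", "3"], lambda a: a == "2")
```

After training, nearly all probability mass sits on the answer that kept earning the positive signal, even though the loop was never shown "2" as a label to imitate. That is the contrast with supervised fine-tuning drawn above.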

- OpenAI found that reinforcement learning lets models produce something close to human thinking, namely CoT (chain of thought): when a step in solving a problem goes wrong, the model goes back to the previous step and tries a new approach. None of this was taught by human researchers. It is an ability the model was forced into, or rather one that emerged, in order to complete the task. Later, when DeepSeek-R1 reproduced a similar "aha moment", o1's core fortress was truly breached.
- The reasoning model is essentially a product of economic calculation. If you pile on compute by brute force, you might still force something like o1 out of a GPT-6, but that would no longer be brute force working a miracle, it would be a miracle working a miracle. Possible, but unnecessary. Model capability can be understood as training compute × inference compute. The former is already too expensive, while the latter is still very cheap, and both factors multiply into the result about equally, so the industry is now taking the more cost-effective inference route.
- The release of o3-mini at the end of last month may not have much to do with DeepSeek-R1, but o3-mini's price dropping to 1/3 of o1-mini's must have been heavily influenced by it. OpenAI believes that ChatGPT's business model has a moat, whereas selling APIs does not, because APIs are too easily substituted. Recently there has also been some debate about whether the chatbot is a good business, and even DeepSeek clearly has not figured out how to absorb this wave of traffic.
There may be an inherent conflict between the consumer market and frontier research.
- In the eyes of technical experts, DeepSeek-R1-Zero is more beautiful than R1, because it involves less manual intervention. It is purely the model itself working out, over thousands of reasoning steps, how to find the optimal solution, and its dependence on prior knowledge is not that high. But because it has had no alignment processing, R1-Zero is basically impossible to ship to users. For example, it mixes several languages in its output. So the R1 that won DeepSeek recognition in the mass market still relies on older methods such as distillation, fine-tuning, and even pre-seeded chains of thought.
- This touches on a mismatch between capability and performance: the most capable model may not be the best performer, and vice versa. R1 performs well in large part because of where the manual effort was directed. R1's training corpus is not exclusive. Everyone's corpus contains classical poetry, so it is not that R1 knows more. The real reason may be data annotation. It is said that DeepSeek hired students from Peking University's Chinese department to do the labeling, which significantly improves the reward function for literary expression, whereas the industry generally does not like to use liberal arts students much. Liang Wenfeng's remark that he sometimes does labeling himself is not just a sign of his enthusiasm. It shows that annotation work has reached the point where professional examiners are needed to tutor the AI. OpenAI also pays $100-200 to have doctoral students do annotation for o1.
- Data, compute, and algorithms are the three flywheels of the large-model industry, and the main breakthrough in this wave comes from algorithms.
DeepSeek-R1 uncovered a misconception: the emphasis that traditional algorithms place on the value function may be a trap. A value function tends to pass judgment on every step of the reasoning process, so as to steer the model onto the right path in fine detail. For example, if the model is answering what 1+1 equals and hallucinates 1+1=3, it is punished on the spot, a bit like electroshock therapy, with no mistakes allowed.
- This approach is fine in theory, but it is also very perfectionist. Not every question is as simple as 1+1. In long chains of thought especially, where the reasoning often runs to thousands of tokens, supervising every step makes the return on investment very low. So DeepSeek made a decision that broke with ancestral teaching: stop using the value function to indulge researchers' obsessive-compulsive streak, score only the answer, and let the model work out for itself how to reach the answer with correct steps. Even if it goes down a 1+1=3 line of reasoning, it is not overcorrected. Instead, it notices during reasoning that something is off, realizes that continuing this way will not produce the correct answer, and corrects itself.
- Algorithms are DeepSeek's biggest innovation for the whole industry, including how to tell whether a model is imitating or reasoning. I remember that after o1 came out, many people claimed that general-purpose models can also output a chain of thought when prompted to. But those models have no reasoning ability and are actually imitating. They still produce the answer in the usual way, and then, because the user asked for it, go back and construct a line of thinking from the answer. That is imitation: the meaningless act of shooting the arrow first and drawing the target afterwards.
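
The difference between judging every step and scoring only the answer can be sketched in a few lines. This is a minimal illustration under stated assumptions, not DeepSeek's training code: the function names, the convention that the final answer sits on the last line of a response, and the use of population standard deviation are all hypothetical choices. Normalizing each reward against the other samples for the same question (in the spirit of the group-relative baseline described in DeepSeek's papers) stands in for the learned value function.

```python
from statistics import mean, pstdev


def outcome_reward(response: str, reference: str) -> float:
    """Score only the final answer; intermediate steps are never inspected.
    Assumes (for this sketch) the final answer is the last line."""
    lines = response.strip().splitlines()
    final_answer = lines[-1].strip() if lines else ""
    return 1.0 if final_answer == reference else 0.0


def group_relative_advantages(rewards, eps=1e-6):
    """Compare each sample with its own group instead of asking a
    value function to judge every step."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four hypothetical sampled responses to "what is 1+1?"
samples = [
    "1+1=3\nwait, that cannot be right; 1+1=2\n2",  # wrong step, self-corrected
    "1+1=3\n3",                                      # wrong step, wrong answer
    "1+1=2\n2",                                      # clean
    "I guess\n11",                                   # wrong
]
rewards = [outcome_reward(s, "2") for s in samples]  # [1.0, 0.0, 1.0, 0.0]
advantages = group_relative_advantages(rewards)
```

The first sample passes through a 1+1=3 step and still receives the full reward because it ends on the right answer, so its advantage is positive (close to +1), the same as the clean sample. The samples that end on a wrong answer get a negative advantage. Nothing in the scoring punishes the intermediate mistake itself.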
- DeepSeek has also put a lot of effort into countering reward hacking, mainly the problem of the model turning into a cheat: it gradually guesses how it should "think" in order to get the reward, without really understanding why it should think that way.
- In recent years the industry has been waiting for models to show emergence. The earlier belief was that with enough knowledge a model would naturally evolve wisdom, but after o1 it turns out that reasoning seems to be the most critical springboard. DeepSeek's paper stresses which behaviors R1-Zero developed on its own rather than at human command. For example, once it realized that generating more tokens lets it think more thoroughly and ultimately perform better, it began actively making its chain of thought longer and longer. In the human world this is instinct (a long think in slow chess is of course more strategic than blitz), but it is very surprising for a model to arrive at such experience by itself.
- DeepSeek-R1's training cost may be between 100,000 and 1 million US dollars, which is less than V3's 6 million US dollars. After open-sourcing it, DeepSeek also demonstrated the results of using R1 to distill other models, and of continuing reinforcement learning after distillation. The open source community's support for DeepSeek is not without reason. It has turned the ticket to AGI from a luxury good into a fast-moving consumer good, letting more people come in and try.
- Kimi k1.5 was released at the same time as DeepSeek-R1, but because it was not open-sourced and lacks an international track record, its influence has been quite limited even though it contributed similar algorithmic innovations. In addition, Kimi is shaped by its consumer (2C) business, so it puts more emphasis on using short chains of thought to achieve the effect of long ones, and therefore rewards k1.5 for shorter reasoning.
Although the intention is to cater to users (nobody should have to wait too long after asking a question), it seems to have backfired somewhat. Much of the DeepSeek-R1 material that broke out of the tech circle consisted of highlights from its chain of thought, discovered and spread by users. People encountering a reasoning model for the first time do not seem to mind the model's verbosity and inefficiency.
- Data annotation is something the whole industry keeps quiet about, but it is only a transitional solution. A self-learning route like R1-Zero is the ideal. For now, OpenAI's moat is still very deep. Last month its web traffic reached an all-time high, and DeepSeek's popularity will objectively bring new users to the entire industry. Meta, however, will be more uncomfortable. LLaMa 3 actually has no architecture-level innovation, and Meta did not foresee the market impact of DeepSeek's open-source release. Meta's talent reserve is very strong, but its organizational structure has not converted these resources into technical achievements.

Now for Ben Thompson's podcast. He cross-validates Pan Jiayi's judgments in many places, for example that removing the HF (human feedback) from RLHF is R1-Zero's technical highlight. More of the discussion, though, is about geopolitical competition and the history of the big tech companies, and the narrative is a pleasure to follow:

- One of Silicon Valley's motivations for paying so much attention to AI safety is to rationalize closed behavior. As early as GPT-2's release, safety was invoked to prevent large language models from being used to generate "deceptive, biased" content, but "deceptive, biased" is a long way from a human-extinction-level risk.
This is essentially a continuation of the culture wars, and it rests on the assumption that "when the granaries are full, people learn etiquette": US technology companies hold an absolute technological lead, so they can afford to be distracted by debating whether AI is racist.
- The same goes for OpenAI's decision to hide o1's chain of thought, which came with a high-minded justification: the raw chain of thought may be unaligned, and users who see it might feel offended, so OpenAI chose a one-size-fits-all policy of not showing it. But DeepSeek-R1 debunked both myths. In the AI industry, Silicon Valley does not hold such a solid lead. And an exposed chain of thought can become part of the user experience, making people trust the model's thinking ability more after watching it.
- The former CEO of Reddit believes that describing DeepSeek as a Sputnik moment (the Soviet Union launching the first artificial satellite ahead of the United States) is a forced interpretation. He would rather place DeepSeek at the Google moment of 2004. That year, Google showed the world in its prospectus how distributed algorithms connect networks of computers together and achieve the optimal balance of price and performance. This set it apart from every other tech company of the time, which simply bought ever more expensive machines and were content to sit at the most expensive end of the cost curve.
- DeepSeek open-sourcing the R1 model and transparently explaining how it was done is a huge act of goodwill. If the company were following the logic of continuing to stoke geopolitics, it should have kept its achievements secret. Google, too, effectively drew the finish line for specialized server manufacturers like Sun, pushing competition down to the commodity layer.
- OpenAI researcher Roon argues that the downgrade optimizations DeepSeek made to work around the H800 chip (engineers could not use Nvidia's CUDA and had to fall back on the lower-level PTX) set the wrong example, because the time wasted on this can never be recouped, while American engineers can requisition H100s without any worries. In his view, weakening hardware cannot lead to real innovation.

- If Google in 2004 had listened to Roon's advice and declined to "waste" valuable researchers on building more economical data centers, then perhaps American Internet companies would be renting Alibaba's cloud servers today. Over the past twenty years of wealth pouring in, Silicon Valley has lost the drive to optimize infrastructure. Companies large and small have grown accustomed to a capital-intensive production model, happy to submit budget sheets in exchange for investment and even to turn Nvidia's chips into collateral. As for how to deliver as much value as possible with limited resources, no one cares.
- AI companies will of course endorse the Jevons paradox, that is, cheaper computing creates more use. But their actual behavior over the past few years has been inconsistent with it, as every company has shown a preference for research over cost, until DeepSeek brought the Jevons paradox right under everyone's nose.
- Nvidia the company becoming more valuable and Nvidia's stock becoming riskier can both be true at the same time. If DeepSeek can achieve this much on highly restricted chips, imagine how much technological progress there would be if it had full-powered computing resources. That is an incentive for the entire industry. But Nvidia's stock price rests on the assumption that it is the only supplier, and that assumption may be falsified.
- Chinese and American technology companies clearly differ in how they judge the value of AI products. The Chinese side believes that differentiation lies in achieving a better cost structure, which is in line with its achievements in other industries.
The American side believes that differentiation comes from the product itself and from the higher profit margins that differentiation creates. But the United States needs to reflect on the mentality of winning competition by denying innovation, such as restricting companies from obtaining the chips needed for AI research.
- However good Claude's reputation is in San Francisco, it is hard to change the inherent weakness of its API-selling model, namely that it is too easy to replace, whereas ChatGPT gives OpenAI, as a consumer technology company, greater resilience to risk. In the long run, however, DeepSeek will benefit both those who sell AI and those who use AI, and we should be thankful for this generous gift.

Well, that's about it. I hope this piece of homework helps you better understand what DeepSeek's rise really means for the AI industry.