Introduction

In the digital era, artificial intelligence (AI) has become a key driver of technological innovation and social progress. The development of AI is not only a technological advance but also an extension of human intelligence. Over the past period, AI has been the hottest topic in venture capital and the capital markets.

With the development of blockchain technology, decentralized AI (DeAI) has emerged. This article explains what decentralized AI is, how it is architected, and how it works together with the broader AI industry.

The definition and architecture of decentralized AI

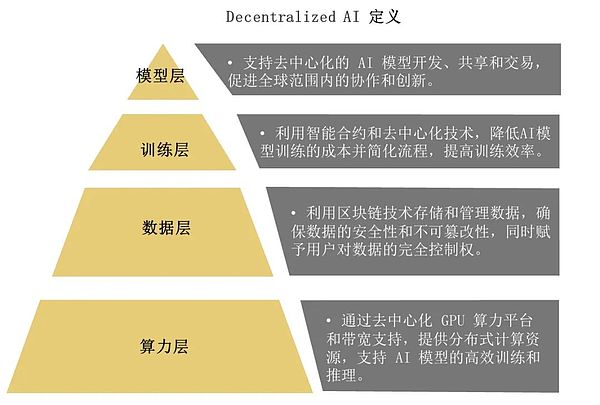

Decentralized AI uses decentralized computing resources and data storage to train and run AI models in a distributed manner, enhancing privacy and security. Its architecture consists of four main layers:

• Model layer: Supports decentralized development, sharing, and trading of AI models, and promotes collaboration and innovation on a global scale. Representative projects at this layer include Bittensor, which uses blockchain technology to build a global platform for sharing AI models and collaborating on them.

• Training layer: Uses smart contracts and decentralized technology to reduce the cost of AI model training, simplify the process, and improve training efficiency. The challenge at this layer is using distributed computing resources effectively for efficient model training.

• Data layer: Uses blockchain technology to store and manage data, ensuring data security and tamper resistance while giving users full control over their data. Applications at this layer include decentralized data markets, which use blockchain technology to enable transparent data transactions and verifiable data ownership.

• Computing power layer: Decentralized GPU computing platforms and bandwidth networks supply distributed computing resources for efficient training and inference of AI models. Technological advances at this layer, such as edge computing and distributed GPU networks, offer new options for training and running AI models.
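The data-layer claim that blockchain storage makes data tamper-resistant rests largely on hash linking: each record stores the hash of its predecessor, so editing any earlier record breaks every later link. The sketch below illustrates only that idea, assuming SHA-256 and a hypothetical `Record` structure; it leaves out consensus, signatures, and replication, which a real chain needs. The `trusted_head` argument stands in for the latest hash that the network has agreed on.

```python
import hashlib
import json
from dataclasses import dataclass

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass
class Record:
    data: str       # payload, e.g. a dataset fingerprint (hypothetical)
    prev_hash: str  # digest of the previous record in the chain

    def digest(self) -> str:
        # Hash a canonical JSON encoding of this record's fields.
        encoded = json.dumps({"data": self.data, "prev": self.prev_hash}, sort_keys=True)
        return hashlib.sha256(encoded.encode("utf-8")).hexdigest()


def build_chain(payloads):
    """Link each record to the digest of the one before it."""
    chain, prev = [], GENESIS
    for payload in payloads:
        record = Record(payload, prev)
        chain.append(record)
        prev = record.digest()
    return chain


def verify_chain(chain, trusted_head: str) -> bool:
    """Recompute every link and compare the final digest to the agreed-upon head."""
    prev = GENESIS
    for record in chain:
        if record.prev_hash != prev:
            return False
        prev = record.digest()
    return prev == trusted_head


if __name__ == "__main__":
    chain = build_chain(["dataset-v1", "dataset-v2", "dataset-v3"])
    head = chain[-1].digest()
    print(verify_chain(chain, head))
    chain[0].data = "tampered"  # silently edit an early record
    print(verify_chain(chain, head))
```

Because the check ends by comparing against a head hash that participants already share, an edit to any record, including the newest one, is detected.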

Representative decentralized AI projects

Decentralized AI industry review: model layer

Model layer: The number of parameters in large models has grown exponentially and model performance has improved significantly, but the returns from further scaling are gradually diminishing. This trend forces us to rethink the direction of AI model development and how to cut costs and resource consumption while maintaining performance.

The development of large AI models follows "scaling laws": model performance depends systematically on parameter count, dataset size, and the amount of training compute.

When a model reaches a certain size, its performance on specific tasks can improve sharply. Beyond that, however, each further increase in parameters yields smaller performance gains. Balancing parameter scale against model performance will be key to future development.
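To see why gains shrink as models grow, consider a hypothetical power-law loss curve L(N) = a · N^(−α). The constants `a` and `alpha` below are made up for illustration and are not fitted values from any published scaling study. Under a power law, each doubling of parameters lowers the loss by a factor of 2^(−α) < 1 relative to the previous doubling, so the absolute improvement keeps shrinking. This smooth curve does not model the sudden task-specific jumps mentioned above.

```python
def loss(n_params: float, a: float = 10.0, alpha: float = 0.1) -> float:
    """Hypothetical power-law loss L(N) = a * N**(-alpha); constants are illustrative only."""
    return a * n_params ** (-alpha)


def gains_per_doubling(start: float, doublings: int):
    """Absolute loss reduction obtained from each successive doubling of N."""
    gains = []
    n = start
    for _ in range(doublings):
        gains.append(loss(n) - loss(2 * n))
        n *= 2
    return gains


if __name__ == "__main__":
    for i, gain in enumerate(gains_per_doubling(1e9, 5), start=1):
        print(f"doubling {i}: loss drops by {gain:.4f}")
```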

API price competition among large AI models has intensified, and many vendors have cut prices to gain market share. However, as large models converge in performance, the sustainability of API revenue is being questioned. Keeping users engaged and growing revenue will be a major challenge going forward.

On-device (edge) models will become practical by reducing numerical precision and adopting mixture-of-experts (MoE) architectures. Model quantization can compress 32-bit floating-point values into 8-bit integers, shrinking weight storage by a factor of four and significantly reducing memory consumption. This lets models run efficiently on edge devices, further popularizing AI technology.
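The 32-bit to 8-bit compression described above can be sketched with symmetric per-tensor linear quantization, one common scheme among several. This is a minimal illustration under that assumption; production toolchains typically add per-channel scales, zero points, and calibration data. The weight values are arbitrary.

```python
import struct


def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]


weights = [0.12, -0.5, 0.33, 0.99, -0.07]  # arbitrary example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Storage comparison: 4 bytes per float32 versus 1 byte per int8.
fp32_bytes = len(struct.pack(f"{len(weights)}f", *weights))
int8_bytes = len(struct.pack(f"{len(q)}b", *q))
print(q, fp32_bytes, int8_bytes)
```

Each weight is off by at most half a quantization step (`scale / 2`) after the round trip, which is the precision traded for the 4x size reduction.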

Summary: Blockchain helps the model layer improve transparency, collaboration, and user engagement.

Decentralized AI industry review: training layer

Training layer: Large-model training requires high-bandwidth, low-latency communication, yet it is possible to attempt large-scale model training on decentralized computing networks. The challenge at this layer is optimizing the allocation of communication and computing resources to make training more efficient.
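A back-of-the-envelope estimate shows why bandwidth matters. Assuming gradients are synchronized with ring all-reduce, in which each of N workers sends about 2(N−1)/N times the gradient size per synchronization, the sketch below compares communication time at a datacenter-class link and a home broadband link. The model size, 16-bit gradients, worker count, and link speeds are hypothetical, and latency, protocol overhead, and overlap with computation are ignored.

```python
def ring_allreduce_seconds(num_params: float, bytes_per_param: int,
                           workers: int, link_gbps: float) -> float:
    """Bandwidth-only estimate of one ring all-reduce (no latency, no overlap)."""
    grad_bytes = num_params * bytes_per_param
    sent_bytes = 2 * (workers - 1) / workers * grad_bytes  # bytes each worker sends
    link_bytes_per_s = link_gbps * 1e9 / 8
    return sent_bytes / link_bytes_per_s


if __name__ == "__main__":
    params = 7e9  # hypothetical 7B-parameter model with 2-byte (16-bit) gradients
    for label, gbps in [("400 Gbps datacenter link", 400), ("1 Gbps home broadband", 1)]:
        t = ring_allreduce_seconds(params, 2, workers=64, link_gbps=gbps)
        print(f"{label}: ~{t:.1f} s per synchronization")
```

Under these assumptions the same synchronization that takes about half a second inside a datacenter takes several minutes over consumer links, which is why the techniques below focus on sending less data and synchronizing less often.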

Decentralized computing networks show some potential for large-model training. Although communication overhead is a serious obstacle, training efficiency can be improved significantly by optimizing scheduling algorithms and compressing the data transmitted. However, overcoming network latency and data-transfer bottlenecks in real-world environments remains the main problem facing decentralized training.

To ease the bottlenecks of large-model training on decentralized computing networks, techniques such as data compression, scheduling optimization, and local updates with periodic synchronization can be adopted. These methods reduce communication overhead and improve training efficiency, making decentralized computing networks a viable option for large-model training.
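Of the techniques listed, data compression is the easiest to illustrate. One widely studied form is top-k gradient sparsification: each worker transmits only the k largest-magnitude gradient entries as (index, value) pairs. The sketch below adds error feedback, a common companion technique in which the dropped remainder is kept locally and added to the next step's gradient so small updates are delayed rather than lost. The class name `CompressingWorker` and the choice of k are illustrative assumptions.

```python
import heapq


def topk_sparsify(grad, k):
    """Return the k largest-magnitude entries as (index, value) pairs, sorted by index."""
    idx = heapq.nlargest(k, range(len(grad)), key=lambda i: abs(grad[i]))
    return [(i, grad[i]) for i in sorted(idx)]


def densify(pairs, length):
    """Rebuild a dense vector from (index, value) pairs; missing entries are zero."""
    out = [0.0] * length
    for i, v in pairs:
        out[i] = v
    return out


class CompressingWorker:
    """Top-k compression with error feedback (the residual is carried to the next step)."""

    def __init__(self, length, k):
        self.residual = [0.0] * length
        self.k = k

    def compress(self, grad):
        corrected = [g + r for g, r in zip(grad, self.residual)]
        pairs = topk_sparsify(corrected, self.k)
        sent = densify(pairs, len(grad))
        self.residual = [c - s for c, s in zip(corrected, sent)]
        return pairs


if __name__ == "__main__":
    worker = CompressingWorker(length=6, k=2)
    print(worker.compress([0.1, -3.0, 0.2, 2.5, -0.05, 0.0]))
    print(worker.compress([0.0] * 6))  # earlier small entries are eventually sent
```

With k entries sent out of n, each message carries roughly k/n of the dense gradient's values (plus indices), at the cost of some extra noise in the optimization.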

Zero-knowledge machine learning (zkML) combines zero-knowledge proofs with machine learning, allowing model validation and inference to be verified without exposing the training data or model details. This is particularly suitable for industries with strict confidentiality requirements, such as healthcare and finance, where it can preserve data privacy while verifying the accuracy and reliability of AI models.

Decentralized AI industry review: data layer

Data privacy and security have become key issues in AI development. Decentralized data storage and processing offer new approaches to these problems.

Data storage, data indexing, and data applications are all key components for keeping a decentralized AI system running. Decentralized storage platforms such as Filecoin and Arweave offer new solutions for data security and privacy protection while lowering storage costs.

Decentralized storage case:

The volume of data stored on Arweave has grown rapidly since 2020, driven mainly by demand from the NFT market and Web3 applications. Arweave lets users store data permanently in a decentralized way, addressing the problem of long-term data preservation.

The AO project further extends the Arweave ecosystem, giving users more powerful computing capabilities and a wider range of application scenarios.

Comparing the two decentralized storage projects, Arweave achieves permanent storage through a one-time payment, while Filecoin uses a monthly payment model and focuses on flexible storage services. Each has its own strengths in technical architecture, business scale, and market positioning, so users can choose the solution that fits their specific needs.
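The trade-off between a one-time payment and recurring payments can be framed as a break-even calculation: recurring fees become more expensive once their running total exceeds the one-time fee. The prices below are placeholders, not actual Arweave or Filecoin quotes; real costs depend on token prices, deal terms, and redundancy settings.

```python
import math


def cumulative_recurring_cost(monthly_price: float, months: int) -> float:
    """Total paid under a recurring model after a given number of months."""
    return monthly_price * months


def break_even_months(one_time_price: float, monthly_price: float) -> int:
    """Smallest number of months after which recurring payments exceed the one-time fee."""
    if monthly_price <= 0:
        raise ValueError("monthly_price must be positive")
    return math.floor(one_time_price / monthly_price) + 1


if __name__ == "__main__":
    one_time = 10.0  # hypothetical one-time price per GB
    monthly = 0.25   # hypothetical monthly price per GB
    months = break_even_months(one_time, monthly)
    print(f"recurring storage costs more after {months} months "
          f"({cumulative_recurring_cost(monthly, months):.2f} vs {one_time:.2f})")
```

Data that must be kept for longer than the break-even horizon favors the one-time model; short-lived or frequently replaced data favors paying as you go.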

Decentralized AI industry review: computing power layer

Computing power layer: As AI models grow more complex, demand for computing resources keeps rising. Decentralized computing networks offer new ways to allocate resources for AI model training and inference.

Decentralized computing networks (including specialized networks for training and inference) are currently the most active and fastest-growing segment of the AI sector. This is consistent with the fact that real-world infrastructure providers have captured much of the value in the AI industry chain. As the shortage of GPUs and other computing resources persists, demand for computing hardware remains strong, and manufacturers are entering this field one after another.

Aethir case:

Business model: two-sided market for computing power leasing

The decentralized computing market essentially uses Web3 technology to extend the concept of grid computing into a trustless environment with economic incentives. By rewarding CPU and GPU owners for contributing idle computing power to the network, it builds a decentralized computing service market of meaningful scale and connects it with the demand side (such as model providers), supplying computing resources at lower cost and with greater flexibility. The decentralized computing market also challenges the dominance of centralized cloud service providers.
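To make the two-sided market concrete, the sketch below matches compute jobs (demand side) to providers (supply side) with a simple greedy rule: larger jobs are placed first, each going to the cheapest provider that has enough free GPUs and stays within the job's price ceiling. This is a hypothetical toy mechanism; it is not Aethir's actual matching or pricing logic, which the text does not describe, and all names and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    free_gpus: int
    price_per_gpu_hour: float


@dataclass
class Job:
    name: str
    gpus: int
    max_price: float  # highest price per GPU-hour the requester will pay


def match(jobs, providers):
    """Greedy matching: largest jobs first, each to the cheapest feasible provider."""
    assignments = {}
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        candidates = [p for p in providers
                      if p.free_gpus >= job.gpus and p.price_per_gpu_hour <= job.max_price]
        if not candidates:
            assignments[job.name] = None  # no provider satisfies capacity and price
            continue
        best = min(candidates, key=lambda p: p.price_per_gpu_hour)
        best.free_gpus -= job.gpus  # reserve capacity on the chosen provider
        assignments[job.name] = best.name
    return assignments


if __name__ == "__main__":
    providers = [Provider("home-rig", 2, 0.8), Provider("mini-dc", 16, 1.2)]
    jobs = [Job("finetune", 8, 1.5), Job("inference", 1, 1.0), Job("big-train", 32, 2.0)]
    print(match(jobs, providers))
```

Real markets add pricing discovery, reputation, and verification of delivered work; greedy placement simply keeps the example short.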

The decentralized computing market can be further divided by the type of service it offers: general-purpose and special-purpose. A general-purpose computing network operates like a decentralized cloud, providing computing resources to a variety of applications. Special-purpose computing networks are purpose-built for specific use cases. For example, Render Network is a specialized compute network focused on rendering workloads; Gensyn is a specialized compute network focused on ML model training; and io.net is an example of a general-purpose compute network.

For DeAI, key challenges in training models on decentralized infrastructure include the sheer scale of computing power required, bandwidth limits, and the high latency caused by heterogeneous hardware from suppliers around the world. A dedicated AI computing network can therefore offer features better suited to AI than a general-purpose computing network. At present, centralized training of ML models is still the most efficient and stable approach, but it demands very substantial capital from the project team.
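The cost of heterogeneous hardware can be seen with simple arithmetic. In synchronous data-parallel training, every step waits for the slowest worker, so splitting the batch equally leaves fast GPUs idle. Splitting it in proportion to each worker's throughput is one common mitigation. The throughput figures below are hypothetical, and communication time is ignored.

```python
def step_time(batch_split, throughputs):
    """Synchronous step time: the slowest worker decides when the step finishes."""
    return max(b / t for b, t in zip(batch_split, throughputs))


def proportional_split(global_batch, throughputs):
    """Assign samples in proportion to throughput (samples/s), spreading the rounding remainder."""
    total = sum(throughputs)
    raw = [global_batch * t / total for t in throughputs]
    split = [int(r) for r in raw]
    # Give leftover samples to the workers with the largest fractional shares.
    leftover = global_batch - sum(split)
    order = sorted(range(len(raw)), key=lambda i: raw[i] - split[i], reverse=True)
    for i in order[:leftover]:
        split[i] += 1
    return split


if __name__ == "__main__":
    throughputs = [400, 200, 100, 100]  # hypothetical samples/s for four mixed GPUs
    equal = [200, 200, 200, 200]
    balanced = proportional_split(800, throughputs)
    print("equal split step time:", step_time(equal, throughputs))
    print("proportional split:", balanced, "step time:", step_time(balanced, throughputs))
```

In this example the proportional split halves the step time, but it cannot remove the latency of slow or distant links, which is why dedicated AI networks still matter.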

Conclusion

As an emerging technology trend, decentralized AI is gradually demonstrating its advantages in data privacy, security, and cost-effectiveness. In the next article, we will explore the risks and challenges decentralized AI faces, as well as its future development directions.