Source: Tencent Technology

Since the Spring Festival, DeepSeek's popularity has kept rising, and with it have come many misunderstandings and controversies. Some people call it "the pride of domestic AI that beat OpenAI", while others say it is "just a clever trick that copies the homework of foreign large models."

These misunderstandings and controversies are mainly concentrated in five aspects:

1. Over-mythologized or mindlessly belittled: is DeepSeek a fundamental innovation? Is there any basis for the claim that it distilled ChatGPT?

2. Is the cost of DeepSeek really only $5.5 million?

3. If DeepSeek can really be so efficient, will the huge AI capital expenditures of major global giants be wasted?

4. Does DeepSeek adopt PTX programming, and can it really bypass the dependence on Nvidia CUDA?

5. DeepSeek is popular all over the world, but will compliance and geopolitical issues get it banned in one country after another?

1. Over-mythologized or mindlessly belittled: is DeepSeek a fundamental innovation?

Internet practitioner caoz believes that DeepSeek's value in advancing the industry deserves recognition, but that it is too early to talk about disruption. According to some professional evaluations, it has not surpassed ChatGPT on certain key problems.

For example, in one test that asked for code simulating a ball bouncing inside a closed space, the program written by DeepSeek still lagged behind the one written by ChatGPT o3-mini in terms of physical plausibility.

Don't over-mythologize it, but don't mindlessly belittle it either.

There are currently two extreme views of DeepSeek's technological achievements: one calls its breakthrough a "disruptive revolution"; the other holds that it is nothing more than an imitation of foreign models. There is even speculation that it made its progress by distilling OpenAI's models.

Microsoft has said that DeepSeek distilled ChatGPT's outputs, and some people have seized on this to disparage DeepSeek.

In fact, both of these views are too one-sided.

To be more precise, DeepSeek's breakthrough is an upgrade of the engineering paradigm aimed at industry pain points, opening a new "less is more" path for AI reasoning.

It innovates mainly at three levels:

First, it slims down the training architecture. For example, the GRPO algorithm removes the Critic model that traditional reinforcement learning requires (the "dual-engine" design), reducing a complex algorithm to an implementable engineering solution;

Second, it adopts simple evaluation criteria. A typical example: in code-generation scenarios, compilation results and unit-test pass rates directly replace manual scoring. This deterministic rule system effectively addresses the problem of subjective bias in AI training;

Finally, it finds a delicate balance in data strategy. By combining the Zero mode, which evolves purely through the algorithm, with the R1 mode, which needs only a few thousand manually labeled samples, it retains the model's capacity for autonomous evolution while ensuring human interpretability.
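The second point, replacing manual scoring with compilation results and unit-test pass rates, can be sketched as a deterministic reward function. This is a minimal illustration rather than DeepSeek's actual pipeline: the name `unit_test_reward`, its signature, and the choice to score by the fraction of passing cases are all assumptions made for the example.

```python
from typing import Any, List, Tuple


def unit_test_reward(source: str, func_name: str,
                     cases: List[Tuple[tuple, Any]]) -> float:
    """Hypothetical rule-based reward: fraction of unit tests passed.

    Returns 0.0 when the candidate code does not compile or does not
    define the expected function. No human judgment is involved.
    WARNING: exec() on untrusted model output needs a real sandbox;
    it is used here only to keep the sketch short.
    """
    namespace: dict = {}
    try:
        exec(compile(source, "<candidate>", "exec"), namespace)
    except Exception:
        return 0.0  # compilation (or top-level execution) failed

    func = namespace.get(func_name)
    if not callable(func) or not cases:
        return 0.0

    passed = 0
    for args, expected in cases:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crashing test case simply counts as a failure
    return passed / len(cases)


if __name__ == "__main__":
    tests = [((1, 2), 3), ((0, 0), 0)]
    print(unit_test_reward("def add(a, b):\n    return a + b\n", "add", tests))
```

Because the score depends only on whether the code compiles and the tests pass, two evaluators will always agree on it, which is the property the paragraph above describes.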

However, these improvements neither break through the theoretical boundaries of deep learning nor completely overturn the technical paradigm of top models such as OpenAI o1/o3. Instead, they solve industry pain points through system-level optimization.

DeepSeek is fully open source and documents these innovations in detail in its published materials, so anyone in the world can use these advances to improve their own AI model training.

Tanishq Mathew Abraham, former head of research at Stability AI, also emphasized three innovations in DeepSeek:

1. Multi-head latent attention (MLA): Large language models are usually based on the Transformer architecture and use the so-called multi-head attention (MHA) mechanism. The DeepSeek team developed a variant of MHA that uses memory more efficiently and achieves better performance.

2. GRPO with verifiable rewards: DeepSeek shows that a very simple reinforcement learning (RL) process can actually achieve GPT-4-like results. More importantly, the team developed a variant of the PPO reinforcement learning algorithm called GRPO, which is more efficient and performs better.

3. DualPipe: When training AI models in multi-GPU environments, many efficiency-related factors need to be considered. The DeepSeek team designed a new approach called DualPipe, which is significantly more efficient and faster.
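The GRPO idea in item 2 can be illustrated with its core step: instead of a learned critic estimating a baseline, each sampled answer is scored relative to the other answers drawn for the same prompt. The sketch below normalizes rewards within one such group. The function name `group_relative_advantages` is hypothetical, and the use of the population standard deviation and the zero-variance fallback are assumptions for the example, not details taken from the source.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float]) -> List[float]:
    """Group-relative advantage: (reward - group mean) / group std.

    The group mean plays the role that a critic's value estimate plays
    in PPO, so no separate value network has to be trained.
    """
    if not rewards:
        return []
    baseline = mean(rewards)
    spread = pstdev(rewards)  # assumption: population standard deviation
    if spread == 0.0:
        # All answers scored the same, so the group carries no signal.
        return [0.0 for _ in rewards]
    return [(r - baseline) / spread for r in rewards]


if __name__ == "__main__":
    # Four sampled answers to one prompt, scored by a verifiable rule
    # (1.0 = tests pass, 0.0 = tests fail).
    print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

Answers that beat the group average receive a positive advantage and are reinforced; answers below it are pushed down.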

In the traditional sense, "distillation" means training on token probabilities (logits). ChatGPT does not expose this data, so it is basically impossible to "distill" ChatGPT in that sense.
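To see why the logits matter, here is a minimal sketch of a classic logit-based distillation loss: the student is trained to match the teacher's full next-token probability distribution, which requires access to the teacher's logits. The function names, the temperature value, and the toy logits are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Numerically stable softmax with a temperature."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) over one token's softened distribution."""
    p = softmax(teacher_logits, temperature)  # needs the teacher's logits
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # toy logits over a 3-token vocabulary
    student = [0.5, 0.5, 0.5]
    print(distillation_loss(teacher, student))
```

When only sampled text is available from an API, the teacher distribution `p` cannot be computed, which is the limitation the paragraph above points to.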

So, from a technical point of view, DeepSeek's achievements should not be questioned. Since OpenAI has never made public the chain-of-thought reasoning of o1, it would be very difficult to achieve this result by relying solely on "distilling" ChatGPT.

caoz believes that DeepSeek's training may have used some distilled corpus data, or a small amount of distillation for verification, but that this should have little impact on the quality and value of the model as a whole.

In addition, optimizing one's own model through distillation-based verification is routine for many large-model teams. But it has to go through an internet API, so the information obtainable is very limited and is unlikely to be the decisive factor. Compared with the massive volume of data on the internet, the corpus that can be obtained by calling a leading large model through its API is a drop in the bucket. A reasonable guess is that it is used more for verifying and analyzing strategies than directly for large-scale training.

All large models need to obtain training corpora from the internet, and leading large models are constantly contributing text to the internet. From this perspective, no leading large model can escape the fate of being scraped and distilled, but there is no need to treat this as the key to success or failure.

In the end, every model has a bit of every other model in it, and all of them move forward iteratively.

2. Is the cost of DeepSeek only US$5.5 million?

The $5.5 million figure is both right and wrong, because it is not clear which costs it covers.

Tanishq Mathew Abraham offers an objective estimate of DeepSeek's cost:

First of all, it is necessary to understand where this number comes from. It first appeared in the DeepSeek-V3 paper, which was published a month earlier than the DeepSeek-R1 paper;

DeepSeek-V3 is the base model of DeepSeek-R1, which means DeepSeek-R1 was in fact produced by additional reinforcement learning training on top of DeepSeek-V3.

So, in a sense, this cost figure is itself not accurate enough, because it does not include the additional cost of reinforcement learning training. But that additional cost is probably only a few hundred thousand dollars.

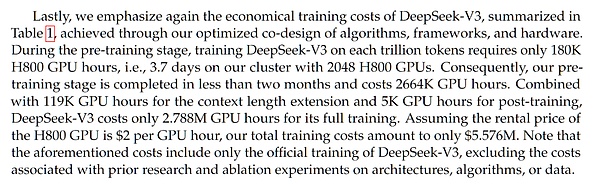

Figure: Discussion of cost in the DeepSeek-V3 paper

< p>So, is the $5.5 million claimed in the DeepSeek-V3 paper accurate? Multiple analyses based on GPU cost, dataset size and model size have arrived at similar estimates. It is worth noting that although DeepSeek V3/R1 is a model with 671 billion parameters, it uses a mixture-of-experts architecture, which means that any single function call or forward pass uses only about 37 billion parameters. This value is the basis for calculating the training cost.

It should be noted that DeepSeek reports costs based on current market prices. We don't know how much their cluster of 2,048 H800 GPUs (note: not H100s, which is a common misunderstanding) actually cost. Normally, buying GPUs in bulk is cheaper than buying them piecemeal, so the actual cost may be lower.
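The headline number is simple arithmetic: GPU-hours multiplied by a rental price. The sketch below uses figures as reported in the DeepSeek-V3 technical report (about 2.788 million H800 GPU-hours at an assumed rental price of $2 per GPU-hour). These two inputs do not appear in this article's text and should be treated as assumptions, as should the function names; the wall-clock estimate further assumes the whole cluster was busy for the entire run.

```python
GPU_HOURS = 2_788_000        # assumption: total H800 GPU-hours from the V3 report
PRICE_PER_GPU_HOUR = 2.0     # assumption: rental price in USD per GPU-hour
CLUSTER_GPUS = 2048          # cluster size mentioned in the article
TOTAL_PARAMS_B = 671         # total parameters, in billions
ACTIVE_PARAMS_B = 37         # parameters used per forward pass, in billions


def training_cost_usd(gpu_hours: float, price_per_hour: float) -> float:
    """Rental-equivalent cost of the final training run."""
    return gpu_hours * price_per_hour


def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Days needed if every GPU in the cluster runs the whole time."""
    return gpu_hours / num_gpus / 24


def active_fraction(active_b: float, total_b: float) -> float:
    """Share of the model's parameters touched by one forward pass."""
    return active_b / total_b


if __name__ == "__main__":
    print(f"cost: ${training_cost_usd(GPU_HOURS, PRICE_PER_GPU_HOUR):,.0f}")
    print(f"days on cluster: {wall_clock_days(GPU_HOURS, CLUSTER_GPUS):.1f}")
    print(f"active share: {active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B):.1%}")
```

Changing `PRICE_PER_GPU_HOUR` shows how sensitive the headline figure is to the assumed price, which is why a bulk hardware purchase could make the real cost lower.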

But the key point is that this is only the cost of the final training run. Before the final run, there were many small-scale experiments and ablation studies, which would have incurred considerable costs that are not reflected in this report.

In addition, there are many other costs, such as researchers' salaries. According to SemiAnalysis, DeepSeek researchers are reported to earn up to $1 million. This is comparable to the high-end salary levels at frontier AGI labs such as OpenAI or Anthropic.

Some people dismiss DeepSeek's low cost and operational efficiency because of these additional costs. That is extremely unfair, because other AI companies also spend heavily on personnel, and this is usually not counted in the cost of a model.

SemiAnalysis, an independent research and analysis firm focused on semiconductors and artificial intelligence, has also published an AI TCO (total cost of ownership) estimate for DeepSeek. Its table summarizes DeepSeek's total cost across four GPU models (A100, H20, H800 and H100), including the cost of purchasing equipment, building out servers, and operations. Over a four-year period, the total cost of these 60,000 GPUs is $2.573 billion, consisting mainly of server purchases ($1.629 billion) and operating costs ($944 million).
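The SemiAnalysis figures quoted above can be cross-checked with a few lines of arithmetic. The per-GPU-hour breakdown below is a derived illustration, not part of the SemiAnalysis table: it assumes the 60,000 GPUs are costed uniformly and run around the clock for the full four years, and the names are hypothetical.

```python
SERVER_CAPEX_MUSD = 1629     # server purchase cost, millions of USD
OPERATING_COST_MUSD = 944    # operating cost, millions of USD
NUM_GPUS = 60_000
YEARS = 4
HOURS_PER_YEAR = 24 * 365


def total_tco_musd(capex_musd: int, opex_musd: int) -> int:
    """Four-year total cost of ownership, in millions of USD."""
    return capex_musd + opex_musd


def cost_per_gpu_hour_usd(total_musd: float, num_gpus: int, years: int) -> float:
    """Average cost per GPU-hour, assuming uniform GPUs at full utilization."""
    return total_musd * 1_000_000 / (num_gpus * years * HOURS_PER_YEAR)


if __name__ == "__main__":
    total = total_tco_musd(SERVER_CAPEX_MUSD, OPERATING_COST_MUSD)
    print(f"total TCO: ${total} million")
    print(f"per GPU-hour: ${cost_per_gpu_hour_usd(total, NUM_GPUS, YEARS):.2f}")
```

The two components do add up to the quoted $2.573 billion, a figure several hundred times larger than the $5.5 million cost of a single training run.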

Of course, no one outside the company knows exactly how many cards DeepSeek has or what share each GPU model accounts for. Everything is just an estimate.

In summary, if all the costs of equipment, servers, operations and so on are counted, the total certainly far exceeds US$5.5 million. Even so, a net compute cost of US$5.5 million is already very efficient.

3. Is huge capital expenditure on computing power just a huge waste?

This is a widely circulated but quite one-sided view. DeepSeek has indeed shown advantages in training efficiency, and it has also exposed possible inefficiencies in how some leading AI companies use computing resources. Even Nvidia's short-term plunge may be related to how widely this misreading has circulated.

But that doesn't mean that having more computing resources is a bad thing. From the perspective of scaling laws, more computing power always means better performance. This trend has held since the Transformer architecture was introduced in 2017, and DeepSeek's models are also based on the Transformer architecture.

Although the focus of AI development keeps evolving, from model scale at first, to dataset size, and now to inference-time compute and synthetic data, the core law that "more compute equals better performance" has not changed.

Although DeepSeek has found a more efficient path, scaling laws still hold, and more computing resources can still deliver better results.

4. Does DeepSeek use PTX to bypass its dependence on NVIDIA CUDA?

DeepSeek's paper mentions that DeepSeek uses PTX (Parallel Thread Execution) programming. Through this kind of customized PTX optimization, DeepSeek's system and models can better unlock the performance of the underlying hardware.

The original text of the paper is as follows:

"we employed customized PTX (Parallel Thread Execution) instructions and auto-tune the communication chunk size, which significantly reduces the use of the L2 cache and the interference to other SMs."

Two interpretations of this passage are circulating online. One holds that it is meant to "bypass the CUDA monopoly". The other holds that, because DeepSeek cannot obtain the highest-end chips, it had to drop down to a lower level to improve cross-chip communication and work around the limited interconnect bandwidth of the H800 GPU.

Dai Guohao, associate professor at Shanghai Jiaotong University, believes that neither statement is accurate. First, PTX (Parallel Thread Execution) instructions are actually a component inside the CUDA driver layer and still rely on the CUDA ecosystem. Therefore, the claim that PTX is used to bypass CUDA's monopoly is wrong.

Professor Dai Guohao used a slide to explain clearly the relationship between PTX and CUDA:

Slide produced by Dai Guohao, associate professor at Shanghai Jiaotong University

CUDA is a relatively high-level interface that provides users with a set of programming interfaces. PTX is generally hidden inside the CUDA driver, so almost no deep learning or large-model algorithm engineer ever touches this layer.

Then why is this layer so important? As its position shows, PTX interacts directly with the underlying hardware, which allows finer-grained programming and invocation of that hardware.

In simple terms, DeepSeek's optimization is not a last resort forced by chip restrictions but a proactive optimization. Whether the chip is an H800 or an H100, this method can improve the efficiency of communication and interconnects.

5. Will DeepSeek be banned abroad?

After DeepSeek became popular, five major giants, Nvidia, Microsoft, Intel, AMD, and AWS, all launched or integrated DeepSeek. In China, Huawei, Tencent, Baidu, Alibaba, and Volcano Engine have also supported the deployment of DeepSeek.

However, some overly emotional remarks are circulating online. On the one hand, some say that because foreign cloud giants have listed DeepSeek, "the foreigners have been defeated."

In fact, these companies deploy DeepSeek mainly for commercial reasons. A cloud vendor that supports and deploys as many of the most popular and capable models as possible can serve its customers better. It can also ride the wave of DeepSeek-related traffic, which may bring some new user conversions.

It is true that a wave of deployments took place at the height of DeepSeek's popularity, but the claim that DeepSeek "defeated" these companies is too exaggerated.

What's more, some people even fabricated a story that, after DeepSeek was attacked, the tech community formed an "Avengers" alliance to jointly support DeepSeek.

On the other hand, there are voices saying that due to geopolitical reasons and other practical reasons, DeepSeek will be banned from use soon.

caoz gave a clearer interpretation of this: what we call DeepSeek actually comprises two products. One is DeepSeek the world-famous app, and the other is the open-source codebase on GitHub. The former can be seen as a demo of the latter, a full showcase of its capabilities, while the latter may grow into a thriving open-source ecosystem.

What is being restricted is DeepSeek's app, while what the giants are integrating and offering is deployments of DeepSeek's open-source software. These are two completely different things.

DeepSeek entered the global AI arena as a "large model" and adopted the most permissive open-source license, the MIT License, which even allows commercial use. The discussion around it has gone far beyond technological innovation, but technological progress has never been a black-and-white question of right and wrong. Rather than falling into overhyping or total denial, it is better to let time and the market test its true value. After all, in the AI marathon, the real race has only just begun.

Reference:

"Some common misreadings about DeepSeek", Author: caoz

https://mp.weixin.qq.com/s/Uc4mo5U9CxVuZ0AaaNNi5g

"The strongest professional breakdown of DeepSeek is here: a super-hardcore interpretation by Tsinghua, Jiaotong and Fudan professors", Author: ZeR0

https://mp.weixin.qq.com/s/LsMOIgQinPZBnsga0imcvA

"Debunking DeepSeek Delusions", Author: Tanishq Mathew Abraham, former head of research at Stability AI

https://www.tanishq.ai/blog/posts/deepseek-delusions.html

< /p>