Article author: Mario Gabriele Article compilation: Block unicorn

The Artificial Intelligence Holy War

I would rather live my life as if there is a God and wait until death to find out that God does not exist than live as if there is no God and wait until death to find out that God exists. - Blaise Pascal

Religion is an interesting thing. Maybe because it's completely unprovable in any direction, or maybe it's like my favorite saying: "You can't fight feelings with facts."

The characteristic thing about beliefs is that in their ascent they accelerate at such an incredible rate that it is almost impossible to doubt the existence of God. How can you doubt a divine being when everyone around you increasingly believes in it? When the world rearranges itself around a doctrine, where is the place for heresy? When temples and cathedrals, laws and norms are ordered according to a new, unshakeable gospel, where is the room for opposition?

When the Abrahamic faiths first appeared and spread across continents, or when Buddhism spread from India across Asia, the immense momentum of faith created a self-reinforcing cycle. As more people converted, and complex theologies and rituals were built around these beliefs, it became increasingly difficult to question their basic premises. It is not easy to be a heretic in a sea of credulity. Magnificent churches, intricate scriptures, and thriving monasteries all serve as physical evidence of the divine presence.

But the history of religion also tells us how easily such a structure can collapse. As Christianity spread into Scandinavia, the ancient Norse faith collapsed in just a few generations. The ancient Egyptian religious system lasted for thousands of years, eventually disappearing as new, more enduring beliefs arose and larger power structures emerged. Even within the same church, we see dramatic schisms: the Reformation tore Western Christianity apart, and the Great Schism split the Eastern and Western churches. These schisms often begin with seemingly trivial doctrinal differences and evolve into entirely different belief systems.

Sacred Scripture

God is a metaphor that transcends all levels of intellectual thought. It's that simple. - Joseph Campbell

Simply put, believing in God is religion. Maybe creating God is no different.

Since the field's inception, optimistic AI researchers have imagined their work as an act of creation, that is, the creation of God. The explosive development of large language models (LLMs) over the past few years has further strengthened believers' conviction that we are on a divine path.

It also confirms a blog post written in 2019. Although it was unknown to people outside the field of artificial intelligence until recently, Canadian computer scientist Richard Sutton's "The Bitter Lesson" has become an increasingly important text in the community, evolving from obscure knowledge into the foundation of a new, all-encompassing religion.

In 1,113 words (every religion needs its sacred numbers), Sutton summarizes a technical observation: "The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin." Advances in AI models have been driven by exponential increases in computing resources, riding the massive wave of Moore's Law. At the same time, Sutton noted, much of the work in AI research focuses on optimizing performance through specialized techniques, either by adding human knowledge or by building narrow tools. While these optimizations may help in the short term, Sutton sees them as ultimately a waste of time and resources, like adjusting a surfboard's fins or trying a new wax when a big wave hits.

This is the basis of what we call the "Bitter Religion." It has only one commandment, often referred to in the community as the "scaling laws": exponential growth in computation drives performance; the rest is foolishness.
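To make the commandment concrete, here is a minimal sketch, assuming the power-law form in which scaling laws are usually written: loss falls as a power of compute toward an irreducible floor. The function name and every constant are invented for illustration; they come from neither Sutton's essay nor any published fit.

```python
# Illustrative only: all constants are hypothetical, chosen to show the shape
# of a power-law curve rather than to match any real model family.
def loss(compute: float, floor: float = 1.7, scale: float = 10.0, alpha: float = 0.1) -> float:
    """Assumed power-law loss curve: floor + scale * compute**(-alpha)."""
    return floor + scale * compute ** (-alpha)


# Hypothetical training budgets, each ten times larger than the last.
budgets = [10.0 ** k for k in range(18, 25)]
losses = [loss(c) for c in budgets]

for budget, value in zip(budgets, losses):
    print(f"{budget:.0e} FLOPs -> loss {value:.3f}")
```

Under this assumed shape, every tenfold increase in compute still lowers the loss, but each step buys a smaller absolute improvement than the one before. That is why steady gains demand exponentially growing budgets.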

The Bitter Religion has expanded from large language models (LLMs) to world models and is now spreading rapidly through the unconverted temples of biology, chemistry, and embodied intelligence (robotics and autonomous vehicles).

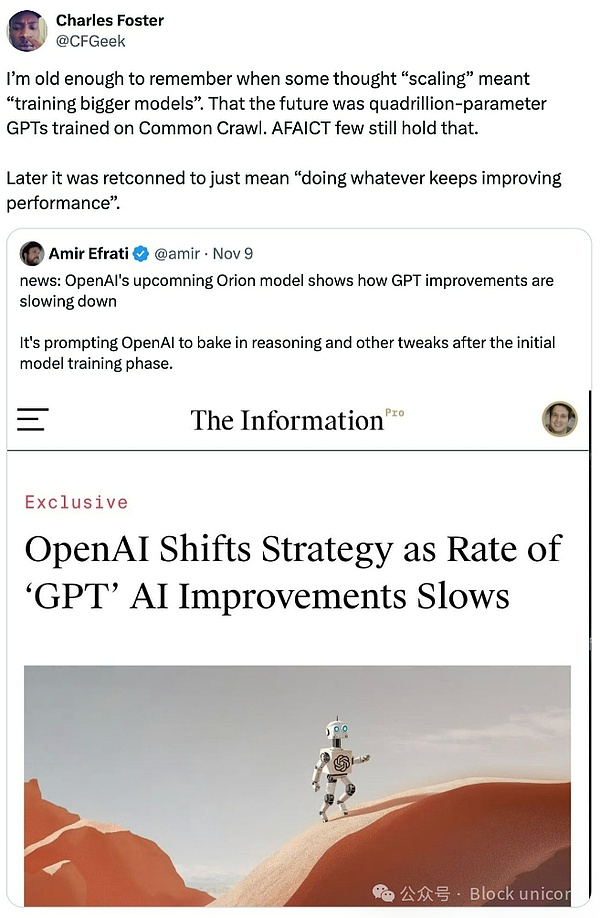

However, as the Sutton doctrine spread, the definition began to change. This is the hallmark of all religions that are active and vital: argument, extension, commentary. The "scaling laws" no longer just mean scaling compute (the Ark is more than just a ship); the term now refers to a variety of methods designed to improve transformer and compute performance, with a few tricks thrown in as well.

The canon now encompasses attempts to optimize every part of the AI stack, from techniques applied to the core models themselves (model merging, mixture of experts (MoE), and knowledge distillation) all the way to generating synthetic data to feed these forever-hungry gods, with numerous experiments along the way.

Warring Sects

Recently, a question has been stirring up the artificial intelligence community, with the flavor of a holy war: is the Bitter Religion still correct?

The conflict kicked off this week with the publication of a new paper from Harvard, Stanford, and MIT called "Scaling Laws for Precision." The paper discusses the end of efficiency gains from quantization, a set of techniques that improve the efficiency of artificial intelligence models and greatly benefit the open source ecosystem. Tim Dettmers, a research scientist at the Allen Institute for Artificial Intelligence, outlined its importance in a post, calling it "the most important paper in a long time." It represents a continuation of a conversation that has been heating up over the past few weeks and reveals a noteworthy trend: two opposing sects.

OpenAI CEO Sam Altman and Anthropic CEO Dario Amodei belong to the same sect. Both men have confidently stated that we will achieve artificial general intelligence (AGI) in about 2-3 years. Altman and Amodei are arguably the two figures who depend most on the divinity of the Bitter Religion. All their incentives push them to overpromise and create maximum hype in order to accumulate capital in a game dominated almost entirely by economies of scale. If the scaling laws are not the Alpha and the Omega, the first and the last, the beginning and the end, then what do you need $22 billion for?

Former OpenAI chief scientist Ilya Sutskever adheres to a different set of principles. He joins other researchers (including many from within OpenAI, based on recent leaks) who believe that scaling is approaching a ceiling, and that sustaining progress and bringing AGI into the real world will inevitably require new science and research.

The Sutskever faction rightly argues that the Altman faction's idea of continued scaling is not economically feasible. As AI researcher Noam Brown asks: "After all, do we really want to train models that cost hundreds of billions or trillions of dollars?" And that is before counting the billions of dollars spent on inference compute.

But true believers are intimately familiar with their opponents' arguments. Your doorstep missionary can handle your Epicurean trilemma with ease. On Brown's and Sutskever's point, the Sutskever faction points to the possibility of scaling "test-time compute." Unlike the approach taken so far, test-time compute does not rely on more computation to improve training; it instead devotes more resources to execution. When an AI model needs to answer your question or generate a piece of code or text, it is given more time and computation. It's the equivalent of shifting your focus from studying for a math test to convincing your teacher to give you an extra hour and allow you to bring a calculator. For many in the ecosystem, this is the Bitter Religion's new frontier, as teams move from orthodox pre-training to a post-training and inference approach.
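As a rough illustration of what "more computation at answer time" can mean, the sketch below uses best-of-N sampling with a verifier, one simple form of test-time compute. The article does not say which methods the labs actually use, and everything here is a hypothetical stand-in: `noisy_solver` plays the role of a model that is right only 40% of the time, and `verifier` plays the role of an answer checker.

```python
import random


def noisy_solver(question: int, rng: random.Random) -> int:
    """Hypothetical model: returns the right answer (question * 2) 40% of the time."""
    truth = question * 2
    if rng.random() < 0.4:
        return truth
    return truth + rng.choice([-3, -2, -1, 1, 2, 3])


def verifier(question: int, answer: int) -> bool:
    """Hypothetical checker that can recognize a correct answer."""
    return answer == question * 2


def best_of_n(question: int, n: int, rng: random.Random) -> int:
    """Sample up to n answers and return the first one the verifier accepts."""
    if n < 1:
        raise ValueError("n must be at least 1")
    candidate = noisy_solver(question, rng)
    for _ in range(n - 1):
        if verifier(question, candidate):
            break
        candidate = noisy_solver(question, rng)
    return candidate


def accuracy(n: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of questions answered correctly with n samples per question."""
    rng = random.Random(seed)
    hits = sum(verifier(q, best_of_n(q, n, rng)) for q in range(trials))
    return hits / trials


for n in (1, 2, 4, 8):
    print(f"samples per question: {n}, accuracy: {accuracy(n):.2f}")
```

The model itself never changes between runs; only the number of attempts per question does. In this toy setup, accuracy rises with the sampling budget, which is the extra hour and the calculator from the analogy above.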

It is easy to point out flaws in other belief systems and criticize other teachings without revealing your own position. So, what is my own belief? First, I believe the current batch of models will deliver a very high return on investment over time. As people learn how to work around limitations and leverage existing APIs, we will see truly innovative product experiences emerge and succeed. We will move beyond the skeuomorphic and incremental stage of AI products. We should think of this not as "artificial general intelligence" (AGI), a definition whose framing is flawed, but as "minimum viable intelligence" that can be customized for different products and use cases.

As for realizing artificial superintelligence (ASI), more structure is needed. Clearer definitions and divisions will help us discuss more effectively the trade-offs between the economic value and economic costs that each may bring. For example, AGI may provide economic value to a subset of users (just a partial belief system), while ASI may exhibit unstoppable compounding effects and change the world, our belief systems, and our social fabric. I don't think ASI is possible by scaling transformers alone; but unfortunately, as some might say, that's just my atheistic belief.

Lost Faith

The AI community cannot resolve this holy war in the short term; there are no facts to present in this emotional battle. Instead, we should turn our attention to what it would mean for AI to question its faith in the scaling laws. The loss of faith could trigger a ripple effect beyond large language models (LLMs), affecting all industries and markets.

It must be noted that in most areas of AI/ML, we have not yet thoroughly explored the scaling laws; there will be more miracles to come. However, if doubt does creep in, it will become harder for investors and builders to maintain as high a level of confidence in the ultimate performance of "early-in-the-curve" categories like biotech and robotics. In other words, if we see large language models start to slow down and drift away from the chosen path, the belief systems of many founders and investors in adjacent areas will collapse.

Whether that is fair is another question.

There is a view that "general artificial intelligence" naturally requires greater scale, and that the "quality" of specialized models should therefore be demonstrable at smaller scales, making them less likely to hit a bottleneck before they can provide real value. If a domain-specific model ingests only a portion of the data and therefore needs only a portion of the computing resources to become viable, shouldn't it have plenty of room to improve? This makes intuitive sense, but we've repeatedly found that the key is often not there: including relevant or seemingly irrelevant data often improves the performance of seemingly unrelated models and, for example, appears to help improve broader reasoning capabilities.

In the long run, the debate about specialized models is probably irrelevant. The ultimate goal of anyone building ASI is likely to be a self-replicating, self-improving entity with unlimited creativity in various fields. Holden Karnofsky, former OpenAI board member and founder of Open Philanthropy, calls this creation "PASTA" (Process for Automating Scientific and Technological Advancement). OpenAI's original profit plan seemed to rely on a similar principle: "Build AGI and then ask it how to pay off." This is eschatological artificial intelligence, the ultimate destiny.

The success of large AI labs like OpenAI and Anthropic has inspired capital markets to support similar "OpenAI for X" labs whose long-term goals center on building "AGI" within their specific industry vertical or domain. Extrapolating from a breakdown in scaling, the paradigm will shift away from OpenAI imitations and toward product-focused companies, a possibility I raised at Compound's 2023 annual meeting.

Unlike the eschatological model, these companies must demonstrate sequential progress. They will be companies built on engineering problems at scale, rather than scientific organizations doing applied research with the ultimate goal of building a product.

In science, if you know what you're doing, you shouldn't be doing it. In engineering, if you don't know what you're doing, you shouldn't be doing it. - Richard Hamming

Believers are unlikely to lose their sacred faith any time soon. As stated earlier, as the religion proliferated, they codified a script for life and worship and a set of heuristics. They built physical monuments and infrastructure that reinforce their strength and wisdom and show that they "know what they're doing."

In a recent interview, Sam Altman said this when talking about AGI (emphasis ours):

This is the first time I feel like we really know what to do. It's still a lot of work to build an AGI, but I think there are some known unknowns. We basically know what to do, and it's going to take a while; it's going to be difficult, but it's also very exciting.

Trial

In questioning the Bitter Religion, scaling skeptics are reckoning with one of the most profound discussions of the past few years. Each of us has pondered this question in some form: What would happen if we invented God? What will happen if AGI (artificial general intelligence) really and irreversibly rises?

As with all unknown and complex topics, we quickly store our specific responses in our brains: some despair at their impending irrelevance, most anticipate a mix of destruction and prosperity, and a final group expects humans to do what we do best, continuing to find problems to solve and solving problems of our own creation, resulting in pure abundance.

Anyone with a big stake would like to be able to predict what the world will look like for them if the scaling laws hold and AGI arrives in a few years. How will you serve this new God, and how will this new God serve you?

But what if the gospel of stagnation drives away the optimists? What if we begin to think that maybe even God is declining? In a previous article, "Robotics FOMO, Scaling Laws, and Technology Forecasting," I wrote:

I sometimes wonder what will happen if the scaling laws do not hold, and whether it will be similar to the impact that lost revenue, slower growth, and rising interest rates have had on many technology sectors. I also sometimes wonder what will happen if the scaling laws do hold, and whether it will be similar to the commoditization curves of pioneers in many other fields and their value capture.

"The good thing about capitalism is that no matter what, we spend a lot of money to find out."

For founders and investors, the question becomes: What happens next? Candidates in every vertical who have the potential to be great product builders are becoming known. There will be many more like this in the industry, but the story is already starting to unfold. Where do new opportunities come from?

If expansion stalls, I expect to see a wave of closures and mergers. The remaining companies will increasingly shift their focus to engineering, an evolution we should anticipate by tracking talent flows. We're already seeing some signs that OpenAI is heading in this direction, as it increasingly productizes itself. This shift will open up space for the next generation of startups to outperform incumbents in their attempts to forge new paths by relying on innovative applied research and science, rather than engineering.

Lessons

My view on technology is that anything that obviously seems to compound usually does not do so for very long, and a common observation is that any business that obviously seems to compound, oddly enough, develops far below the expected speed and scale.

Early signs of a religious schism often follow predictable patterns that can serve as a framework for continuing to track the evolution of the Bitter Religion.

It usually begins with the emergence of competing explanations, whether for capitalist or ideological reasons. In early Christianity, differing views about the divinity of Christ and the nature of the Trinity led to schisms, producing vastly different interpretations of Scripture. In addition to the schism in AI we’ve already mentioned, there are other rifts that are emerging. For example, we see a subset of AI researchers rejecting the core orthodoxy of transformers and turning to other architectures such as State Space Models, Mamba, RWKV, Liquid Models, etc. While these are just soft signals for now, they show the beginnings of heretical thinking and a willingness to rethink the field from its first principles.

Over time, the prophet's impatient remarks can also lead to people's distrust. When a leader's predictions don't come true, or divine intervention doesn't come as promised, it sows seeds of doubt.

The Millerite movement had predicted the return of Christ in 1844, but the movement fell apart when Jesus did not arrive as planned. In the tech world, we often bury failed prophecies in silence and allow our prophets to continue painting optimistic, long-term versions of the future even as scheduled deadlines are repeatedly missed (Hi, Elon). However, belief in the scaling laws may face a similar collapse if not supported by continued improvements in raw model performance.

A corrupt, bloated or unstable organization is susceptible to the influence of apostates. The Protestant Reformation gained ground not only because of Luther's theological views, but also because it emerged during a period of decline and turmoil for the Catholic Church. When cracks appear in mainstream institutions, long-standing "heretical" ideas suddenly find fertile ground.

In the field of artificial intelligence, we may look to smaller models or alternative methods that achieve similar results with less computation or data, such as the work done by various corporate labs and open source teams like Nous Research. Those who push the limits of biological intelligence, overcoming obstacles long considered insurmountable, may also create a new narrative.

The most direct and timely way to observe the onset of transformation is to follow trends among practitioners. Before any formal schism, scholars and clergy often maintained heretical views in private while appearing compliant in public. Today's equivalent might be some AI researchers who ostensibly follow the scaling laws but secretly pursue radically different approaches, waiting for the right moment to challenge the consensus or leave their labs in search of theoretically wider horizons.

The tricky thing about orthodoxies in religion and technology is that they are often partly true, just not as universally true as their most devoted believers think. Just as religions weave fundamental human truths into their metaphysical frameworks, the scaling laws clearly describe how neural networks really learn. The question is whether this reality is as complete and immutable as the current enthusiasm suggests, and whether these religious institutions (AI labs) are nimble and strategic enough to bring the enthusiasts along while also building the printing presses (chat interfaces and APIs) that allow their knowledge to spread.

Endgame

"Religion is true in the eyes of ordinary people, false in the eyes of wise men, and useful in the eyes of rulers." - Lucius Annaeus Seneca

A perhaps outdated view of religious institutions is that once they reach a certain size, they, like many human-run organizations, tend to succumb to the survival motive as they try to outlast the competition. In the process, they neglect the motives of truth and greatness (which are not mutually exclusive).

I once wrote an article about how capital markets have become information cocoons driven by narratives, and incentives tend to perpetuate those narratives. The consensus around the scaling laws feels ominously similar: a deeply ingrained belief system that is mathematically elegant and extremely useful in coordinating large-scale capital deployments. Like many religious frameworks, it may be more valuable as a coordination mechanism than as a fundamental truth.