Author: 0xhhh Source: :

The operating principle of Provider and Action

The operating principle of Evaluator

The design ideas of Eliza Memory

This is the first article of the series, and it mainly introduces the operating principles of Provider and Action.

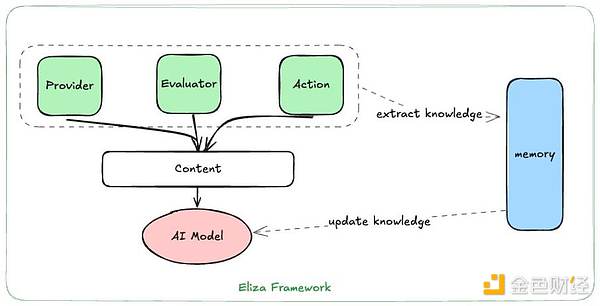

1. The architecture of Eliza (see the figure) is mainly divided into three parts.

The top layer is abstracted into Provider, Evaluator and Action. Provider corresponds to the human ability to obtain information (the eyes take in visual information, the ears take in auditory information, and so on); Action corresponds to the human ability to act on information (for example, judging where BTC is heading from market information); and Evaluator resembles human thinking, distilling knowledge from large amounts of information into personal cognition.

The bottom layer consists of the various AI Models. The Eliza framework currently supports most models on the market, such as OpenAI, Claude, Gemini, Grok/xAI and others. This human-like brain is the key processing module for every decision.

Memory lets an AI Agent started with the Eliza framework break through the context-length limit. The information the AI obtains from the environment during the Provider stage and the results of executing Actions are compressed and stored in Memory, and key information from dialogues or any other interaction with humans can also be extracted by the Evaluator (this will be covered in detail in the next thread).

2. Next, we introduce the operating principles of "Provider" and "Action" in detail.

"Provider"

We need to think about three questions about Provider:

Why do we need Provider (why does the Eliza framework include a Provider component)?

How does the AI understand the information a Provider supplies?

How is a Provider invoked (how does the AI in the Eliza framework obtain information through a Provider)?

Why do we need Provider?

Provider mainly solves a problem we run into when we use prompts to have the AI fetch information: what it returns is often inaccurate or insufficient. Because the models we use today are large general-purpose models, their coverage of specialized domains is sometimes not comprehensive enough.

For example, the following code is the implementation of TokenProvider in Eliza. Through the APIs we provide, it collects key on-chain information about a token across multiple dimensions, such as who the top ten holders are, how much of the token each of them holds, and the token's 24h price change. All of this is finally returned to the AI Model as text, so that the model can use it to make key decisions, such as whether to buy a meme token.

But if we simply ask the AI through a prompt to fetch this information for us, we will find that it gives us code instead. That code does not always run, so the errors it produces have to be fed back to the AI until the code finally works, and even then we still have to deploy it against the blockchain environment ourselves and supply a reliable API key.

For example:

So, to make data retrieval reliable, the Eliza framework encapsulates this data-fetching code in a Provider definition. With it, we can easily obtain the asset information of any account on Solana. This is the core reason we need Provider.
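To make the idea of encapsulation concrete, here is a minimal TypeScript sketch of a provider in the spirit of TokenProvider. It is not Eliza's code: it assumes a simplified provider shape with a single async `get` method (in Eliza, `get` also receives the agent runtime, the current message and optional state), and `TokenSnapshot`, `fetchSnapshot` and `makeTokenProvider` are hypothetical names, with `fetchSnapshot` returning fixed sample values in place of real on-chain API calls.

```typescript
// Hypothetical, simplified provider shape. Eliza's real `get` also receives
// the agent runtime, the current message and optional state.
interface SimpleProvider {
  get: () => Promise<string>;
}

// Hypothetical shape of the on-chain data a token provider collects.
interface TokenSnapshot {
  symbol: string;
  priceChange24hPct: number;
  topHolders: { address: string; sharePct: number }[];
}

// Stand-in for real API calls: returns fixed sample values for illustration.
async function fetchSnapshot(mint: string): Promise<TokenSnapshot> {
  return {
    symbol: `TOKEN(${mint.slice(0, 4)})`,
    priceChange24hPct: -3.5,
    topHolders: [
      { address: "HolderA", sharePct: 12.5 },
      { address: "HolderB", sharePct: 8 },
    ],
  };
}

// Wrap the data fetching in a provider; the model only ever sees the text it returns.
function makeTokenProvider(mint: string): SimpleProvider {
  return {
    get: async () => {
      const snap = await fetchSnapshot(mint);
      const holders = snap.topHolders
        .map((h, i) => `${i + 1}. ${h.address} holds ${h.sharePct}%`)
        .join("\n");
      return [
        `Token: ${snap.symbol}`,
        `24h price change: ${snap.priceChange24hPct}%`,
        `Top holders:\n${holders}`,
      ].join("\n");
    },
  };
}
```

Calling `makeTokenProvider(...).get()` resolves to a few lines of plain text (token, 24h change, ranked holders). The design choice worth noting is that the model never handles API keys or raw responses, only the formatted text.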

How does the AI understand the information provided by a Provider?

The information the Eliza framework obtains through a Provider is ultimately returned to the AI Model as text (natural language), because natural language is the input format the AI Model expects.
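A rough sketch of that last step, under the assumption that each provider simply resolves to a string (or nothing): the texts are merged into one context block and placed in the prompt next to the user's message. `collectProviderText` and `buildPrompt` are hypothetical helpers, and the prompt layout is illustrative rather than Eliza's actual template.

```typescript
// Each provider resolves to plain text, or null when it has nothing to add.
type TextProvider = () => Promise<string | null>;

// Hypothetical helper: run every provider and merge the non-empty texts
// into one context block.
async function collectProviderText(providers: TextProvider[]): Promise<string> {
  const outputs = await Promise.all(providers.map((p) => p()));
  return outputs
    .filter((t): t is string => t !== null && t.trim().length > 0)
    .join("\n\n");
}

// Hypothetical prompt layout: provider context first, then the user's message.
function buildPrompt(context: string, userMessage: string): string {
  return `# Context\n${context}\n\n# User\n${userMessage}`;
}
```

Because everything is reduced to text before it reaches the model, any provider can be added without changing how the model is called.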

How is a Provider invoked (how does the AI in the Eliza framework obtain information through a Provider)?

Although the Eliza framework provides an interface abstraction for Providers, the way Providers are called is not yet modular: individual Actions still call the Providers they need directly, according to their own information requirements. The relationship diagram is as follows:

Suppose we have a BuyToken Action that decides whether to buy a token based on human recommendations. While this Action executes, it requests information from TokenProvider and WalletProvider. TokenProvider supplies information that helps the AI Agent judge whether the token is worth buying; WalletProvider supplies the private key used to sign the transaction, along with information about the assets available in the wallet.
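The direct wiring described above can be sketched as follows. This is a hypothetical TypeScript illustration, not the Eliza BuyToken implementation: `walletProvider`, `tokenProvider` and `buyTokenHandler` are invented names, the liquidity threshold is an arbitrary example rule, and signing is faked with a string so the sketch runs without a chain connection.

```typescript
// Hypothetical wallet view: balance plus a signing capability.
// A real wallet provider would wrap a keypair, never a key string in code.
interface WalletInfo {
  solBalance: number;
  sign: (tx: string) => string;
}

interface TokenInfo {
  symbol: string;
  liquidityUsd: number;
}

// Stand-ins for WalletProvider and TokenProvider; values are illustrative.
async function walletProvider(): Promise<WalletInfo> {
  return { solBalance: 10, sign: (tx: string) => `signed(${tx})` };
}

async function tokenProvider(symbol: string): Promise<TokenInfo> {
  return { symbol, liquidityUsd: 250_000 };
}

// Hypothetical BuyToken handler: the action itself decides which providers
// to call, mirroring the direct (non-modular) wiring described in the text.
async function buyTokenHandler(symbol: string, amountSol: number): Promise<string> {
  const [wallet, token] = await Promise.all([walletProvider(), tokenProvider(symbol)]);
  // TokenProvider data informs the buy decision (example rule only).
  if (token.liquidityUsd < 50_000) return `Skip ${symbol}: liquidity too low`;
  // WalletProvider data tells us what we can afford and lets us sign.
  if (wallet.solBalance < amountSol) return `Skip ${symbol}: insufficient SOL`;
  const tx = `buy ${symbol} with ${amountSol} SOL`;
  return wallet.sign(tx);
}
```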

"Action"

You can easily find the definition of Action at the following GitHub link, but it is hard to understand without reading the code in depth. We need to think about four questions about Action:

Why do we need Action? (Why does the Eliza framework need Action?)

How is an Action invoked? (How does the Eliza framework let the AI call an Action?)

What exactly does an Action in the Eliza framework do?

How do we make the AI understand what the Action it just called did?

Why do we need Action? (Why does the Eliza framework abstract Action?)

Suppose I tell the AI: here is my private key

0xajahdjksadhsadnjksajkdlad12612

The wallet holds 10 SOL. Can you buy 100 ai16z tokens for me?

Claude's reply is as follows:

Obviously, handing over a private key this way is not secure, and it is also hard for the AI to carry out such on-chain operations itself.

We can go a step further and ask the AI to write the code for us: whenever our wallet holds SOL, swap all of it into the meme token I specify.

Claude certainly has this ability, but we still need to guide it over many rounds before we finally get code that satisfies us.

So we can encapsulate the code the AI gives us into an Eliza Action, and provide some prompt examples that help the AI understand when it should call this Action.
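A hedged sketch of what such an encapsulated Action might look like. The field names (`name`, `similes`, `description`, `examples`, `validate`, `handler`) loosely follow Eliza's Action interface, but the types here are simplified stand-ins, `SWAP_ALL_SOL` is a hypothetical action, and the handler only returns a string where the real swap code would go.

```typescript
// Simplified message type; Eliza's Memory/Content types carry much more.
interface Msg {
  user: string;
  text: string;
  action?: string;
}

// Simplified action shape loosely modeled on Eliza's Action interface.
interface SimpleAction {
  name: string;
  similes: string[];
  description: string;
  examples: Msg[][];
  validate: (msg: Msg) => boolean;
  handler: (msg: Msg) => Promise<string>;
}

// Hypothetical "swap all SOL into a chosen meme token" action.
const swapAllSol: SimpleAction = {
  name: "SWAP_ALL_SOL",
  similes: ["BUY_MEME_WITH_ALL_SOL", "CONVERT_SOL"],
  description: "Swap the entire SOL balance of the agent wallet into a specified token.",
  // Example conversations tell the model when this action is appropriate.
  examples: [
    [
      { user: "{{user1}}", text: "Put all my SOL into BONK" },
      { user: "{{agent}}", text: "Swapping all SOL into BONK now.", action: "SWAP_ALL_SOL" },
    ],
  ],
  // Cheap guard: only consider the action when an uppercase ticker appears.
  validate: (msg) => /\b[A-Z]{2,10}\b/.test(msg.text),
  // The encapsulated (already debugged) swap code would live here.
  handler: async (msg) => `would swap all SOL per request: "${msg.text}"`,
};
```

The point of the encapsulation is that the swap logic is fixed code, while the model only decides whether the action applies.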

(In real usage scenarios, the operations we want are much more complicated than this. For a swap, for example, we want a slippage limit. If such conditional constraints are left to the large model, it is hard for us to guarantee that every one of them is met once execution finishes.)
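One way to keep a constraint like slippage out of the model's hands is to enforce it in the Action's own code. The sketch below assumes amounts are integer base units and the tolerance is given in basis points (1 bps = 0.01%); `minAmountOut` and `withinSlippage` are hypothetical helpers, not functions from Eliza.

```typescript
// Lowest output (in integer base units) we accept for a quoted swap, given
// a slippage tolerance in basis points (1 bps = 0.01%).
function minAmountOut(quotedOut: number, slippageBps: number): number {
  if (!Number.isSafeInteger(quotedOut) || quotedOut < 0) {
    throw new RangeError("quotedOut must be a non-negative integer amount of base units");
  }
  if (!Number.isInteger(slippageBps) || slippageBps < 0 || slippageBps > 10_000) {
    throw new RangeError("slippageBps must be an integer between 0 and 10000");
  }
  const keep = 10_000 - slippageBps;
  // Split quotedOut into whole multiples of 10000 and a remainder so the
  // intermediate products stay within the safe-integer range.
  const rem = quotedOut % 10_000;
  const whole = (quotedOut - rem) / 10_000; // exact: numerator is a multiple of 10000
  // Round down to a whole base unit.
  return whole * keep + Math.floor((rem * keep) / 10_000);
}

// Deterministic guard: the swap is acceptable only if the amount actually
// received is at or above the floor.
function withinSlippage(quotedOut: number, actualOut: number, slippageBps: number): boolean {
  return actualOut >= minAmountOut(quotedOut, slippageBps);
}
```

For example, with a quote of 1,000,000 base units and a 50 bps tolerance, the floor is 995,000, so receiving 994,999 would be rejected.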

How is an Action invoked? (How does the Eliza framework let the AI call an Action?)

The following is the prompt example the Eliza framework uses so that the AI Model can create a meme token on Pumpfun and buy a certain amount of it. When we provide such examples in an Action, the AI Agent can draw on them later: when similar content comes up in its interactions with humans, it recognizes the kind of content in our prompt examples and knows which Action to call.
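As a rough, hypothetical illustration of the mechanism, examples can be stored as short conversations in which the agent's reply is tagged with the action it chose, then rendered as plain text for the prompt. The `ExampleTurn` type, the `{{user1}}`/`{{agent}}` placeholders, the `CREATE_AND_BUY_TOKEN` name and `renderExamples` are assumptions made for this sketch.

```typescript
// One conversation turn; `action` marks which action the agent chose.
interface ExampleTurn {
  user: string;
  text: string;
  action?: string;
}

// Hypothetical example set for a "create and buy a meme token" action.
const createAndBuyExamples: ExampleTurn[][] = [
  [
    { user: "{{user1}}", text: "Create a token called GLITCHIZA on pump.fun and buy 0.1 SOL of it" },
    { user: "{{agent}}", text: "Creating GLITCHIZA and buying 0.1 SOL worth.", action: "CREATE_AND_BUY_TOKEN" },
  ],
];

// Render examples as plain text for the prompt, replacing the placeholders
// with concrete speaker names and appending the chosen action in brackets.
function renderExamples(examples: ExampleTurn[][], userName: string, agentName: string): string {
  return examples
    .map((convo) =>
      convo
        .map((t) => {
          const speaker = t.user === "{{agent}}" ? agentName : userName;
          const suffix = t.action ? ` (${t.action})` : "";
          return `${speaker}: ${t.text}${suffix}`;
        })
        .join("\n")
    )
    .join("\n\n");
}
```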

Because the Eliza framework supports many Actions at the same time, it also provides the following HandlerMessageTemplate, which lets the AI Model select the appropriate Action to call.

In effect, this template reorganizes all the relevant data and presents it to the AI Model in a logical order, so that the model can call the predefined actions more accurately. (This is hard to achieve if we use an AI Model client directly.)
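A minimal sketch of the same idea, assuming a hypothetical template that lists each registered action with its description and asks the model to answer in JSON. The parsing step only accepts an action name that is actually registered, which is one way to keep the model's choice within the predefined set. None of these names (`ActionSpec`, `composeActionPrompt`, `pickAction`) come from Eliza.

```typescript
interface ActionSpec {
  name: string;
  description: string;
}

// Hypothetical template: lists every registered action so the model can pick one.
function composeActionPrompt(actions: ActionSpec[], recentMessages: string): string {
  const list = actions.map((a) => `- ${a.name}: ${a.description}`).join("\n");
  return [
    "# Available Actions",
    list,
    "",
    "# Conversation",
    recentMessages,
    "",
    'Reply with JSON: {"text": string, "action": one of the action names or "NONE"}',
  ].join("\n");
}

// Parse the model's reply and accept only an action that is actually registered.
function pickAction(reply: string, actions: ActionSpec[]): string | null {
  try {
    const parsed = JSON.parse(reply) as { action?: unknown };
    const chosen = typeof parsed.action === "string" ? parsed.action : "";
    return actions.some((a) => a.name === chosen) ? chosen : null;
  } catch {
    // Malformed output: treat as "no action" rather than guessing.
    return null;
  }
}
```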

What exactly does an Action in the Eliza framework do?

https://github.com/elizaOS/eliza/blob/main/packages/plugin-solana/src/actions/pumpfun.ts#L279

We will use the Pumpfun Action as a concrete example. Its process is as follows:

Get information from WalletProvider and TokenProvider

Generate the transactions that create the meme token and buy it

Sign the transaction and send it to the chain

Call the callback function to process the results after the Action is executed.

In fact, the core consists of two parts: obtaining information from the Providers, and then generating the operations that perform the action.
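The four steps listed above can be sketched as a single handler. This is a hypothetical outline rather than the code in pumpfun.ts: the `Deps` interface stands in for WalletProvider and the chain client (only the wallet is queried here), transaction building and signing are faked with strings, and the callback receives a simplified result object.

```typescript
// Simplified result passed to the callback after execution.
interface ActionResult {
  text: string;
  success: boolean;
}

type Callback = (result: ActionResult) => Promise<void>;

// Hypothetical dependencies standing in for WalletProvider and the chain client.
interface Deps {
  getWallet: () => Promise<{ publicKey: string; solBalance: number }>;
  buildCreateAndBuyTx: (creator: string, symbol: string, buySol: number) => string;
  signAndSend: (tx: string) => Promise<string>; // resolves to a tx signature
}

async function createAndBuyHandler(
  deps: Deps,
  symbol: string,
  buySol: number,
  callback?: Callback
): Promise<boolean> {
  // 1. Get information from the provider(s).
  const wallet = await deps.getWallet();
  if (wallet.solBalance < buySol) {
    await callback?.({ text: `Not enough SOL to buy ${symbol}`, success: false });
    return false;
  }
  // 2. Build the transaction that creates the token and buys it.
  const tx = deps.buildCreateAndBuyTx(wallet.publicKey, symbol, buySol);
  // 3. Sign it and send it to the chain.
  const signature = await deps.signAndSend(tx);
  // 4. Report the outcome through the callback.
  await callback?.({ text: `Created ${symbol} and bought ${buySol} SOL worth (tx ${signature})`, success: true });
  return true;
}
```

Steps 1 and 2 to 3 correspond to the two core parts: gathering information, then producing and executing the operation.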

How do we let the AI understand what the Action it called did?

If this problem is not solved, we cannot get the AI to understand and carry out related tasks.

The answer: after an Action executes, we summarize its result in text and add that summary to the AI's memory.

Specifically, the last parameter of the Action's handler function, callback, is a callback function. We define this callback so that it adds the execution result to the Memory module of the AI Model.
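A minimal sketch of such a callback, assuming a simplified memory record and an in-memory store in place of Eliza's database-backed memory. `MemoryRecord`, `MemoryStore` and `makeMemoryCallback` are hypothetical; the callback returns the stored records, loosely mirroring a callback that resolves to a list of memories.

```typescript
// Simplified memory record; a real record would also carry ids, embeddings, etc.
interface MemoryRecord {
  roomId: string;
  text: string;
  createdAt: number;
}

// Minimal in-memory store standing in for the agent's memory module.
class MemoryStore {
  private records: MemoryRecord[] = [];

  add(record: MemoryRecord): void {
    this.records.push(record);
  }

  // Most recent records for one conversation, oldest first.
  recent(roomId: string, limit: number): MemoryRecord[] {
    return this.records.filter((r) => r.roomId === roomId).slice(-limit);
  }
}

// Build a callback that stores the text summary of an action's outcome,
// so later prompts can include what the agent has already done.
function makeMemoryCallback(store: MemoryStore, roomId: string) {
  return async (response: { text: string }): Promise<MemoryRecord[]> => {
    const record: MemoryRecord = { roomId, text: response.text, createdAt: Date.now() };
    store.add(record);
    return [record];
  };
}
```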

The callback function is defined as follows:

The complete Action and Provider architecture of Eliza is as follows: