Author: Qin Jingchun

In the previous article, we discussed how decentralized AI has become a key component in building the Web3 value Internet. We also argued that AO + Arweave, with its permanent storage, hyper-parallel computing and verifiability, provides an ideal infrastructure for this ecosystem. This article turns to the technical details of AO + Arweave. By comparing it with mainstream decentralized platforms, it examines AO + Arweave's advantages in supporting AI development and explores its complementary relationship with vertical decentralized AI projects.

In recent years, AI technology has developed rapidly and demand for large-scale model training keeps growing, so decentralized AI infrastructure has become a hot topic in the industry. Traditional centralized computing platforms keep upgrading their computing power, but their data monopolies and high storage costs increasingly expose their limitations. Decentralized platforms, by contrast, can lower storage costs. They also use decentralized verification mechanisms to ensure that data and computation are not tampered with, which lets them play an important role in key stages such as AI model training, inference and verification. Meanwhile, Web3 itself currently suffers from fragmented data, inefficient DAO governance and poor interoperability between platforms, so integrating with decentralized AI is essential for its further development.

This article compares the strengths and weaknesses of the mainstream platforms along four dimensions: memory limits, data storage, parallel computing power and verifiability. It then explains in detail why the AO+Arweave system shows clear competitive advantages in decentralized AI.

1. Comparative analysis of various platforms: Why is AO+Arweave unique?

1.1 Memory and computing power requirements

As AI models keep growing, memory and computing power have become key measures of platform capability. Even a relatively small model such as Llama-3-8B needs at least 12 GB of memory to run. Models like GPT-4, reportedly with more than a trillion parameters, place far greater demands on memory and compute. Training involves large volumes of matrix operations, backpropagation and parameter synchronization, all of which require full use of parallel computing capabilities.
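Memory figures like these follow from a simple rule of thumb: holding the weights alone takes roughly parameter count × bytes per parameter. The sketch below assumes a nominal 8 billion parameters. It ignores activations, KV cache and runtime overhead, so real requirements are higher. At 16-bit precision the weights alone already take about 16 GB, so a figure such as 12 GB presumes some quantization. Treat the numbers as rough estimates, not measurements.

```python
# Rough lower bound on the memory needed just to hold model weights.
# Assumption: memory ~= parameter count x bytes per parameter. Activations,
# KV cache and framework overhead are ignored, so real usage is higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,  # bf16 has the same size
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the weight footprint in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    params_8b = 8e9  # nominal size of an 8B-parameter model such as Llama-3-8B
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params_8b, precision):.1f} GB")
```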

AO+Arweave: Through its parallel compute units (CUs) and Actor model, AO can split a task into multiple subtasks that execute simultaneously, achieving fine-grained parallel scheduling. This architecture lets training make full use of the parallelism of hardware such as GPUs. It also significantly improves efficiency in key steps such as task scheduling, parameter synchronization and gradient updates.
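To make the task-splitting idea concrete, here is a minimal actor-model sketch in Python. It assumes each actor is an asyncio task with a private mailbox and that actors interact only by messages. The names `worker` and `coordinator` are hypothetical, and this is not AO's real process or CU interface. asyncio runs on a single thread, so the sketch shows the message-passing structure rather than real hardware parallelism.

```python
import asyncio

# Minimal actor-model sketch: each actor is an asyncio task that owns a private
# mailbox (asyncio.Queue) and shares no state with other actors.
# Illustrative only; not AO's actual process or compute-unit implementation.


async def worker(inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
    """Receive (task_id, chunk) messages and reply with (task_id, partial_sum)."""
    while True:
        msg = await inbox.get()
        if msg is None:  # shutdown signal
            break
        task_id, chunk = msg
        await outbox.put((task_id, sum(x * x for x in chunk)))


async def coordinator(data: list[int], num_workers: int) -> int:
    """Split a sum-of-squares job into subtasks and gather the partial results."""
    results: asyncio.Queue = asyncio.Queue()
    inboxes = [asyncio.Queue() for _ in range(num_workers)]
    tasks = [asyncio.create_task(worker(box, results)) for box in inboxes]

    # Scatter: send one round-robin slice of the data to each worker.
    chunks = [data[i::num_workers] for i in range(num_workers)]
    for task_id, (box, chunk) in enumerate(zip(inboxes, chunks)):
        await box.put((task_id, chunk))

    # Gather: collect exactly one reply per subtask.
    total = 0
    for _ in range(num_workers):
        _, partial = await results.get()
        total += partial

    # Tell every worker to stop, then wait for them to exit.
    for box in inboxes:
        await box.put(None)
    await asyncio.gather(*tasks)
    return total


if __name__ == "__main__":
    print(asyncio.run(coordinator(list(range(10)), num_workers=3)))  # 285
```

Because the actors share no state, the same scatter/gather pattern carries over in principle when workers run on different machines and the queues become network messages.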

ICP: ICP subnets support a degree of parallel computing. However, execution inside a single container (canister) allows only coarse-grained parallelism. This makes it hard to meet the fine-grained scheduling needs of large-scale model training and limits overall efficiency.

Ethereum and Base: Both use a single-threaded execution model. Their architectures were designed mainly for decentralized applications and smart contracts. They lack the highly parallel computing capacity needed to train, run and verify complex AI models.

Computing power demand and market competition

With the popularity of projects such as DeepSeek, the barrier to training large models keeps falling. More small and medium-sized companies may enter the race, making computing resources increasingly scarce. In this environment, decentralized infrastructure with distributed parallel computing power, such as AO, will become increasingly attractive. As infrastructure for decentralized AI, AO+Arweave is positioned to become key support for realizing the Web3 value Internet.

1.2 Data storage and economy

Data storage is another crucial indicator. On-chain storage is extremely expensive on traditional blockchain platforms such as Ethereum. They therefore usually keep only critical metadata on-chain and move large-scale data to off-chain solutions such as IPFS or Filecoin.

Ethereum: Most data is kept in external storage (such as IPFS or Filecoin). Immutability can still be guaranteed, but the high cost of on-chain writes makes storing large volumes of data directly on-chain impractical.
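A quick calculation shows why storing large datasets directly in Ethereum contract storage is impractical. The sketch rests on three illustrative assumptions, not live network values:

- the EIP-2200 SSTORE set cost of 20,000 gas per fresh 32-byte slot, ignoring cold-access surcharges and calldata;
- a 30 million gas block limit;
- a hypothetical gas price.

```python
# Back-of-the-envelope cost of writing raw bytes into Ethereum contract storage.
# Illustrative assumptions, not live network values:
#   * each fresh 32-byte storage slot costs 20,000 gas (EIP-2200 SSTORE set
#     cost; EIP-2929 cold-access surcharges and calldata costs are ignored),
#   * the block gas limit is 30,000,000 gas.
SLOT_BYTES = 32
SSTORE_SET_GAS = 20_000
BLOCK_GAS_LIMIT = 30_000_000


def storage_gas(num_bytes: int) -> int:
    """Gas needed to store num_bytes in previously empty slots."""
    slots = -(-num_bytes // SLOT_BYTES)  # ceiling division
    return slots * SSTORE_SET_GAS


def cost_in_eth(gas: int, gas_price_gwei: float) -> float:
    """Convert gas to ETH at a given gas price (1 gwei = 1e-9 ETH)."""
    return gas * gas_price_gwei * 1e-9


if __name__ == "__main__":
    one_mib = 1024 * 1024
    gas = storage_gas(one_mib)
    print(f"gas for 1 MiB: {gas:,}")                           # 655,360,000
    print(f"full blocks needed: {gas / BLOCK_GAS_LIMIT:.1f}")  # 21.8
    print(f"ETH at a hypothetical 10 gwei: {cost_in_eth(gas, 10):.2f}")
```

Even under these simplified assumptions, a single mebibyte would fill more than twenty entire blocks.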

AO+Arweave: Arweave's permanent, low-cost storage provides long-term archiving and immutability of data. For large-scale data such as AI training data, model parameters and training logs, Arweave keeps the data secure and also provides strong support for managing the model lifecycle afterwards. At the same time, AO can directly access data stored on Arweave. This forms a complete economic closed loop for data assets and promotes the adoption of AI technology in Web3.
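The key property here is data that can be referenced but never silently changed. It can be illustrated with a toy write-once store. This sketch assumes records are addressed by the SHA-256 hash of their content. That is a simplification: real Arweave transaction IDs are derived differently. The `WriteOnceStore` class and the stored byte strings are hypothetical.

```python
import hashlib

# Toy write-once store: a record's ID is the SHA-256 hash of its content, so a
# record cannot change without its ID changing. A simplification for
# illustration; real Arweave transaction IDs are derived differently.


class WriteOnceStore:
    def __init__(self) -> None:
        self._records: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        record_id = hashlib.sha256(data).hexdigest()
        # Re-uploading identical bytes is idempotent; nothing is overwritten.
        self._records.setdefault(record_id, data)
        return record_id

    def get(self, record_id: str) -> bytes:
        data = self._records[record_id]
        # Any reader can check integrity independently by re-hashing.
        if hashlib.sha256(data).hexdigest() != record_id:
            raise ValueError("record does not match its ID")
        return data


if __name__ == "__main__":
    store = WriteOnceStore()
    weights_id = store.put(b"model-weights-v1")
    log_id = store.put(b"training-log-epoch-1")
    # A hypothetical compute process later loads its inputs by ID alone.
    print(store.get(weights_id), store.get(log_id))
```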

Other platforms (Solana, ICP): Solana optimizes state storage through its account model, but large-scale data storage still relies on off-chain solutions. ICP uses built-in container (canister) storage that supports dynamic expansion. However, storing data long-term requires continuous payment in Cycles, which makes its overall economics relatively complex.

1.3 The importance of parallel computing capabilities

When training large-scale AI models, processing compute-intensive tasks in parallel is the key to efficiency. Splitting large matrix operations into many parallel tasks can significantly reduce training time while making full use of hardware such as GPUs.
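The row-block decomposition can be sketched in plain Python. Each block of rows of A is multiplied by B independently, and the results are concatenated in order. Threads are used so the sketch runs anywhere. In CPython the GIL limits thread speedups for pure-Python arithmetic, so a real system would use processes or, more realistically, GPU kernels. `parallel_matmul` is an illustrative name, not a library API.

```python
from concurrent.futures import ThreadPoolExecutor

# Split C = A @ B into independent row blocks and compute them concurrently.
# Plain lists keep the sketch dependency-free; a real training stack would hand
# these blocks to GPU kernels instead.

Matrix = list[list[float]]


def matmul_rows(args: tuple[Matrix, Matrix]) -> Matrix:
    """Multiply a block of rows of A by the full matrix B."""
    rows, b = args
    cols = list(zip(*b))  # columns of B
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in rows]


def parallel_matmul(a: Matrix, b: Matrix, num_blocks: int = 4) -> Matrix:
    block = max(1, -(-len(a) // num_blocks))  # rows per block, ceiling division
    jobs = [(a[i:i + block], b) for i in range(0, len(a), block)]
    with ThreadPoolExecutor() as pool:
        # map() preserves job order, so reassembly is a simple concatenation.
        parts = list(pool.map(matmul_rows, jobs))
    return [row for part in parts for row in part]


if __name__ == "__main__":
    a = [[1, 2], [3, 4], [5, 6]]
    b = [[1, 1], [0, 1]]
    print(parallel_matmul(a, b, num_blocks=2))  # [[1, 3], [3, 7], [5, 11]]
```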

AO: AO achieves fine-grained parallel computing through independent computing tasks coordinated by message passing. Its Actor model supports splitting a single task into as many as millions of child processes and enables efficient communication between nodes. This architecture is particularly suited to large-model training and distributed computing. In theory it can reach extremely high TPS (transactions per second). In practice throughput is limited by I/O and other constraints, but it still far exceeds that of traditional single-threaded platforms.
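The caveat that throughput is limited by I/O and other constraints can be quantified with Amdahl's law. Suppose a fraction p of the work parallelizes across n workers and the rest stays serial. The ideal speedup is then 1 / ((1 - p) + p / n), and it can never exceed 1 / (1 - p) however large n gets. The p values below are illustrative assumptions, not measurements of AO.

```python
# Amdahl's law: with a parallel fraction p spread over n workers and the rest
# (e.g. I/O, message ordering) serial, ideal speedup = 1 / ((1 - p) + p / n).
# The p values used below are illustrative only.


def amdahl_speedup(p: float, n: int) -> float:
    if not 0.0 <= p <= 1.0 or n < 1:
        raise ValueError("p must be in [0, 1] and n >= 1")
    return 1.0 / ((1.0 - p) + p / n)


if __name__ == "__main__":
    for p in (0.90, 0.99):
        for n in (1, 100, 10_000):
            print(f"p={p:.2f} n={n:>6}: {amdahl_speedup(p, n):7.1f}x")
```

With n = 1 the speedup is 1x, which corresponds to a single-threaded platform. Even a modest serial fraction caps the gain, so "theoretically extremely high" and "limited in practice" are both consistent with the same model.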

Ethereum and Base: Because of the single-threaded EVM execution model, both struggle with complex parallel workloads and cannot meet the requirements of training large AI models.

Solana and ICP: Solana's Sealevel runtime supports multi-threaded parallel execution, but its parallelism is relatively coarse-grained. ICP still executes mostly single-threaded within each container. Both therefore hit significant bottlenecks on massively parallel tasks.
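Sealevel's parallelism rests on transactions declaring up front which accounts they read and write, so that non-overlapping transactions can run side by side. The greedy batching below is a simplified illustration of that idea under our own assumptions. It is not Solana's actual scheduler, and `Tx` and `schedule` are hypothetical names.

```python
from dataclasses import dataclass, field

# Simplified Sealevel-style scheduling: transactions declare read/write account
# sets, and non-conflicting transactions share a parallel batch. This greedy
# packing is an illustration, not Solana's real scheduler.


@dataclass
class Tx:
    name: str
    reads: set[str] = field(default_factory=set)
    writes: set[str] = field(default_factory=set)


def conflicts(a: Tx, b: Tx) -> bool:
    # Two writers of one account, or a writer and a reader, must be ordered.
    return bool(a.writes & (b.reads | b.writes) or b.writes & a.reads)


def schedule(txs: list[Tx]) -> list[list[str]]:
    """Place each tx in the earliest batch after every earlier conflicting tx."""
    batches: list[list[Tx]] = []
    for tx in txs:
        target = 0
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                target = i + 1
        if target == len(batches):
            batches.append([])
        batches[target].append(tx)
    return [[tx.name for tx in batch] for batch in batches]


if __name__ == "__main__":
    txs = [
        Tx("t1", writes={"A"}),
        Tx("t2", writes={"B"}),
        Tx("t3", reads={"A"}, writes={"C"}),
        Tx("t4", writes={"D"}),
    ]
    print(schedule(txs))  # [['t1', 't2', 't4'], ['t3']]
```

Parallelism here is decided per transaction. Work inside a single transaction is not split further, which is one way to read the "coarse-grained" remark above.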

1.4 Verification and System Trust

A major advantage of decentralized platforms is that global consensus and immutable storage greatly increase the credibility of data and computation results.

Ethereum: Global consensus verification and the zero-knowledge proof (ZKP) ecosystem make smart contract execution and data storage highly transparent and verifiable, but verification is costly.

AO+Arweave: AO builds a complete audit trail by recording all computation on Arweave, and it relies on a "deterministic virtual machine" to ensure that results can be reproduced. This architecture improves the verifiability of computation results and strengthens overall trust in the system. It provides strong guarantees for AI model training and inference.
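The audit-and-replay idea can be sketched as follows. Messages are kept in an append-only, hash-chained log. Because the transition function is deterministic, anyone can replay the log and verify both its integrity and the resulting state. The message format and the `apply` function are hypothetical, and AO's real message schema and virtual machine are not modeled here.

```python
import hashlib
import json

# "Store every input, replay to verify": messages live in an append-only,
# hash-chained log; a deterministic transition function lets anyone recompute
# the state. The counter-style messages here are hypothetical.

GENESIS = "0" * 64


def apply(state: dict, msg: dict) -> dict:
    """Deterministic transition: same state + same message -> same new state."""
    new_state = dict(state)
    new_state[msg["key"]] = new_state.get(msg["key"], 0) + msg["amount"]
    return new_state


def chain_hash(prev_hash: str, msg: dict) -> str:
    payload = prev_hash + json.dumps(msg, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def build_log(messages: list[dict]) -> list[tuple[dict, str]]:
    log, h = [], GENESIS
    for msg in messages:
        h = chain_hash(h, msg)
        log.append((msg, h))
    return log


def replay(log: list[tuple[dict, str]]) -> dict:
    """Verify the hash chain, then recompute the state from scratch."""
    state, h = {}, GENESIS
    for msg, recorded in log:
        h = chain_hash(h, msg)
        if h != recorded:
            raise ValueError("log has been tampered with")
        state = apply(state, msg)
    return state


if __name__ == "__main__":
    msgs = [{"key": "alice", "amount": 5}, {"key": "bob", "amount": 3},
            {"key": "alice", "amount": -2}]
    print(replay(build_log(msgs)))  # {'alice': 3, 'bob': 3}
```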

2. The complementary relationship between AO+Arweave and vertical decentralized AI projects

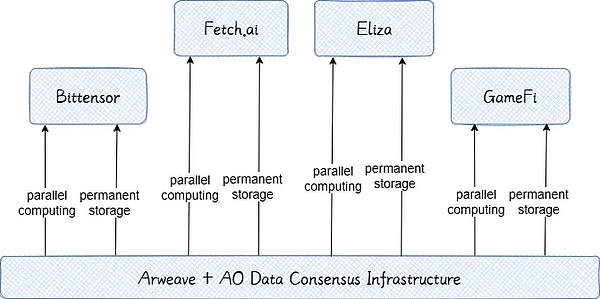

In decentralized AI, vertical projects such as Bittensor, Fetch.ai and Eliza, along with GameFi applications, are actively exploring their own application scenarios. As an infrastructure platform, AO+Arweave offers efficient distributed computing power, permanent data storage and full-chain auditability. Together these provide the foundational support that vertical projects need.

2.1 Examples of technical complementarity

Bittensor:

Participants in Bittensor contribute computing power to train AI models, which places heavy demands on parallel computing resources and data storage. AO's hyper-parallel architecture lets many nodes run training tasks simultaneously on the same network. Through its open messaging mechanism they can quickly exchange model parameters and intermediate results, avoiding the bottlenecks of sequential execution on traditional blockchains. This lock-free concurrent architecture speeds up model updates and significantly raises overall training throughput.
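One way to picture the parameter exchange is simple parameter averaging. Each node takes a local gradient step, the updated parameters are exchanged, and every node merges them by element-wise mean. This is a generic decentralized-training pattern used here purely for illustration. It is not Bittensor's actual incentive or training protocol, and the toy gradients are made up.

```python
# Generic parameter averaging, assumed for illustration only: nodes update
# independently, exchange parameters as messages, and merge by averaging.
# This is not Bittensor's real protocol; the gradients below are toy values.


def local_update(params: list[float], grads: list[float], lr: float) -> list[float]:
    """One gradient-descent step computed independently on a single node."""
    return [p - lr * g for p, g in zip(params, grads)]


def average(param_sets: list[list[float]]) -> list[float]:
    """Element-wise mean of the parameter vectors received from peers."""
    n = len(param_sets)
    return [sum(values) / n for values in zip(*param_sets)]


if __name__ == "__main__":
    shared = [1.0, 1.0]
    node_grads = [[0.5, 0.0], [0.0, 0.5], [0.25, 0.25]]  # toy per-node gradients
    # Each node steps in parallel; results are then exchanged and merged.
    updated = [local_update(shared, g, lr=0.4) for g in node_grads]
    print(average(updated))
```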

At the same time, Arweave provides a reliable storage solution for critical data, model weights and performance evaluation results. Large datasets generated during training can be written to Arweave in real time. The data is permanent, publicly retrievable and immutable, so any newly joining node can fetch the latest training data and model snapshots. This ensures that all participants train on the same data. The combination simplifies data distribution and gives a transparent, reliable basis for model version control and result verification. It lets the Bittensor network approach the computing efficiency of centralized clusters while keeping the advantages of decentralization, pushing the performance limits of decentralized machine learning.

Fetch.ai's autonomous economic agents (AEAs):

In Fetch.ai's multi-agent collaboration system, AO+Arweave also shows strong synergies. Fetch.ai is building a decentralized platform on which autonomous agents collaborate in on-chain economic activities. Such applications must handle the concurrent operation and data exchange of large numbers of agents, which places very high demands on computation and communication.

AO provides a high-performance runtime for Fetch.ai. Each autonomous agent can be treated as an independent computing unit in the AO network, so multiple agents can execute complex operations and decision logic in parallel on different nodes without blocking one another. The open messaging mechanism further optimizes inter-agent communication: agents exchange information asynchronously through on-chain message queues, avoiding the latency caused by global state updates on traditional blockchains. With AO's support, hundreds of Fetch.ai agents can communicate, compete and cooperate in real time, simulating the pace of real-world economic activity.

At the same time, Arweave's permanent storage supports data sharing and knowledge retention for Fetch.ai. Important data that each agent generates or collects while operating, such as market information, interaction logs and agreements, can be stored on Arweave. This forms a permanent public memory bank that other agents or users can retrieve at any time without trusting a centralized server. It also keeps records of inter-agent collaboration open and transparent. For example, once an agent's published terms of service or price quote is written to Arweave, it becomes a public record recognized by all participants. It cannot be lost through node failure or altered by malicious tampering. With AO's high-concurrency computing and Arweave's trusted storage, Fetch.ai's multi-agent system can achieve an unprecedented depth of on-chain collaboration.

Eliza Multi-Agent System:

Traditional AI chatbots typically rely on the cloud: they process natural language with powerful centralized computing and use databases to store long-term conversation history and user preferences. With AO's hyper-parallel computing, an on-chain intelligent assistant can distribute task modules (such as language understanding, dialogue generation and sentiment analysis) across multiple nodes for parallel processing. It can then respond quickly even when many users ask questions at once. AO's messaging mechanism ensures efficient collaboration between modules. For example, after the language understanding module extracts the meaning of an utterance, it passes the result to the response generation module as an asynchronous message, keeping the conversation flowing smoothly under a decentralized architecture.

Meanwhile, Arweave serves as Eliza's "long-term memory": all user interaction records, preferences and new knowledge learned by the assistant can be encrypted and stored permanently. However much time has passed, the earlier context can be retrieved when the user returns, enabling personalized and coherent responses. Permanent storage prevents the memory loss that data loss or account migration can cause in centralized services. It also provides historical data for the continuous learning of AI models, so the on-chain AI assistant "gets smarter the more you use it."

GameFi Real-time Agent Application:

In decentralized games (GameFi), the complementary features of AO and Arweave play a key role. Traditional MMOs rely on centralized servers for massive concurrent computation and state storage, which runs counter to blockchain's principle of decentralization. AO allows game logic and physics simulation to be distributed across a decentralized network for parallel processing. For example, in an on-chain virtual world, scene simulation, NPC behavior decisions and player interaction events in different regions can be computed simultaneously on different nodes. Cross-region information is then exchanged by message passing to build a complete virtual world together. This architecture removes the single-server bottleneck, allowing a game to scale its computing resources roughly linearly with the player count while keeping the experience smooth.
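Region-based parallelism can be sketched under a simplifying assumption: a one-dimensional world split into fixed-width regions. Every region advances its own entities independently. Entities that cross a border are handed to the neighbouring region as messages applied after the tick. `REGION_WIDTH`, `step_region` and `tick` are hypothetical, and a thread pool stands in for separate nodes.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sketch: a 1-D world split into regions. Each region steps its
# own entities independently; entities that leave a region are handed to the
# destination region as messages delivered after every region has finished.

REGION_WIDTH = 10  # region k covers positions x in [k * 10, (k + 1) * 10)

Entities = dict[str, tuple[int, int]]  # name -> (position x, velocity vx)


def step_region(region_id: int, entities: Entities):
    """Move every entity by its velocity; report the ones that leave the region."""
    stay: Entities = {}
    outgoing = []
    for name, (x, vx) in entities.items():
        new_x = x + vx
        target = new_x // REGION_WIDTH
        if target == region_id:
            stay[name] = (new_x, vx)
        else:
            outgoing.append((target, name, (new_x, vx)))
    return region_id, stay, outgoing


def tick(world: dict[int, Entities]) -> dict[int, Entities]:
    # Regions run concurrently (threads stand in for separate nodes).
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda item: step_region(*item), world.items()))
    new_world = {rid: stay for rid, stay, _ in results}
    # Deliver cross-region "messages" once all regions have finished the tick.
    for _, _, outgoing in results:
        for target, name, state in outgoing:
            new_world.setdefault(target, {})[name] = state
    return new_world


if __name__ == "__main__":
    world = {0: {"knight": (8, 3)}, 1: {"npc": (12, -1)}}
    print(tick(world))  # {0: {}, 1: {'npc': (11, -1), 'knight': (11, 3)}}
```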

At the same time, Arweave's permanent storage provides reliable state records and asset management for games. Key states (such as map changes and player data) and important events (such as rare item drops and story progress) are periodically committed as on-chain records. The metadata and media of player assets (such as character skins and item NFTs) are stored directly, ensuring permanent ownership and tamper resistance. Even when the system is upgraded or nodes are replaced, the historical state saved on Arweave can be restored, so player achievements and property are not lost to technical changes. No player wants this data to vanish suddenly, and it has happened before: years ago, Vitalik Buterin was upset when Blizzard removed the damage component of his warlock's Siphon Life spell in World of Warcraft.

Permanent storage also lets the player community contribute to a game chronicle, with any important event preserved on-chain for the long term. With AO's high-intensity parallel computing and Arweave's permanent storage, this decentralized game architecture overcomes the performance and data-persistence bottlenecks of the traditional model.

2.2 Advantages of ecosystem integration and complementarity

AO+Arweave not only supports vertical AI projects as infrastructure but also aims to build an open, diverse and interconnected decentralized AI ecosystem. Compared with projects focused on a single domain, AO+Arweave covers a broader ecosystem and more application scenarios. Its goal is a complete value chain spanning data, algorithms, models and computing power. Only in an ecosystem of this scale can the potential of Web3 data assets be fully unleashed and a healthy, sustainable decentralized AI economy take shape.

3. Web3 Value Internet and Permanent Value Store

The arrival of the Web3.0 era means that data assets will become the most important resource on the Internet. Just as the Bitcoin network stores "digital gold", Arweave provides permanent storage that preserves valuable data assets long-term and protects them from tampering. Today, Internet giants' monopoly over user data makes it difficult for the value of personal data to be realized. In the Web3 era, users will own their data, and token incentive mechanisms will enable effective data exchange.

Attributes of value store:

Arweave achieves strong scalability, especially for large-scale data storage, through Blockweave, SPoRA (Succinct Proofs of Random Access) and bundling. As a result, Arweave can both handle permanent data storage and provide solid support for intellectual property management, data asset trading and AI model lifecycle management.

Data Asset Economy:

Data assets are the core of the Web3 value Internet. In the future, personal data, model parameters, training logs and similar data will become valuable assets, circulating efficiently through token incentives, data rights confirmation and related mechanisms. AO+Arweave is infrastructure built on this vision. It aims to open up circulation channels for data assets and inject lasting vitality into the Web3 ecosystem.

4. Risks, challenges and future prospects

Although AO+Arweave has shown many technical advantages, it still faces the following challenges in practice:

1. Complexity of the economic model

AO's economic model must be deeply integrated with the AR token economy to ensure low-cost data storage and efficient data transfer. This involves incentive and penalty mechanisms across multiple node types, such as Messenger Units (MUs), Scheduler Units (SUs) and Compute Units (CUs). Security, cost and scalability must be balanced through a flexible SIV sub-staking consensus mechanism. In practice, the project must carefully consider how to match the number of nodes to task demand so that neither idle resources nor insufficient returns arise.

2. The current AO+Arweave ecosystem focuses mainly on data storage and computing power and has not yet formed a complete decentralized market for models and algorithms. Without stable model providers, the development of AI agents in the ecosystem will be constrained. It is therefore advisable to support decentralized model-market projects through ecosystem funds, building strong competitive barriers and a long-term moat.

Despite these challenges, the arrival of the Web3.0 era will bring the confirmation and circulation of data asset rights, which will reshape the Internet's entire value system. As an infrastructure pioneer, AO+Arweave is expected to play a key role in this change and help build a decentralized AI ecosystem and the Web3 value Internet.

Conclusion

Based on a detailed comparison across four dimensions (memory, data storage, parallel computing and verifiability), we believe AO+Arweave shows clear advantages in supporting decentralized AI workloads. These advantages are strongest in meeting the needs of large-scale model training, reducing storage costs and strengthening system trust. AO+Arweave also provides strong infrastructure support for vertical decentralized AI projects. Beyond that, it has the potential to build a complete AI ecosystem, closing the loop on economic activity around Web3 data assets and bringing broader change.

In the future, economic models will mature, the ecosystem will grow and cross-domain cooperation will deepen. As they do, AO+Arweave+AI is expected to become an important pillar of the Web3 value Internet and to bring new change to data asset rights confirmation, value exchange and decentralized applications. Risks and challenges remain in real-world implementation, but continuous trial, error and optimization are what will ultimately bring breakthroughs for the technology and its ecosystem.